Large Language Models (LLMs), such as those behind ChatGPT, are incredibly powerful and valuable.

If you aren't in the habit of using them on a daily basis, I would strongly encourage you to tune in more closely, as they can add massive value to your life and business, so long as you know how to ask good questions.

From a generalised perspective, I have understood for some time how valuable LLMs can be.

The thing that has been bugging me, though, is how they can add value in a more localised and domain-specific context.

For example, a few years ago I built a real estate platform called Stacked, which helps people manage their portfolios. How could I build a chatbot into the platform that lets users ask questions about their real estate portfolios and receive tailored responses?

This is where RubyLLM comes in.

The gem's documentation does a great job of outlining the steps you need to work through to get up and running, so I won't labour the point here. In simple terms, you need to:

- Install the gem

- Configure your API keys (e.g. for OpenAI)

- Create Chat, Message and ToolCall models

- Run the associated database migrations

- Wire up a basic UI, using Hotwire and Turbo, so you can experiment with asking questions and receiving responses
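As a rough sketch of the configuration and model steps, assuming your OpenAI key lives in an `OPENAI_API_KEY` environment variable and you stick with the model names listed above, the code might look something like this. The file paths in the comments are conventional Rails locations rather than anything the gem insists on, and the gem's documentation remains the source of truth for the migrations and the UI.

```ruby
# config/initializers/ruby_llm.rb
# The `defined?` guards exist only so this sketch can be run outside a Rails app
# without raising an error; inside your own app you can drop them.
if defined?(RubyLLM)
  RubyLLM.configure do |config|
    # Assumes the key is stored in an environment variable with this name.
    config.openai_api_key = ENV["OPENAI_API_KEY"]
  end
end

if defined?(RubyLLM) && defined?(ApplicationRecord)
  # app/models/chat.rb
  class Chat < ApplicationRecord
    acts_as_chat
  end

  # app/models/message.rb
  class Message < ApplicationRecord
    acts_as_message
  end

  # app/models/tool_call.rb
  class ToolCall < ApplicationRecord
    acts_as_tool_call
  end
end
```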

After completing these steps, you'll have a chatbot that you can start playing around with.

At this point, if I ask the chatbot a generic question, such as "What is the best restaurant in London?", I receive a suitably generic response, much as I would if I asked ChatGPT directly. That is effectively what I'm doing, albeit via my application.

Where things start to get interesting and valuable is when I ask a domain-specific question, such as "What is the projected value of my property portfolio in 10 years' time?"

The chatbot can't give a helpful answer to this question, because it doesn't know which properties I own.

So, we have a generalised chatbot, which lacks localised and domain-specific knowledge.

How do we fix this problem?

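This is where Tools come in. A Tool is a piece of Ruby code that the model can ask our application to run, so it can fetch data it doesn't otherwise have.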

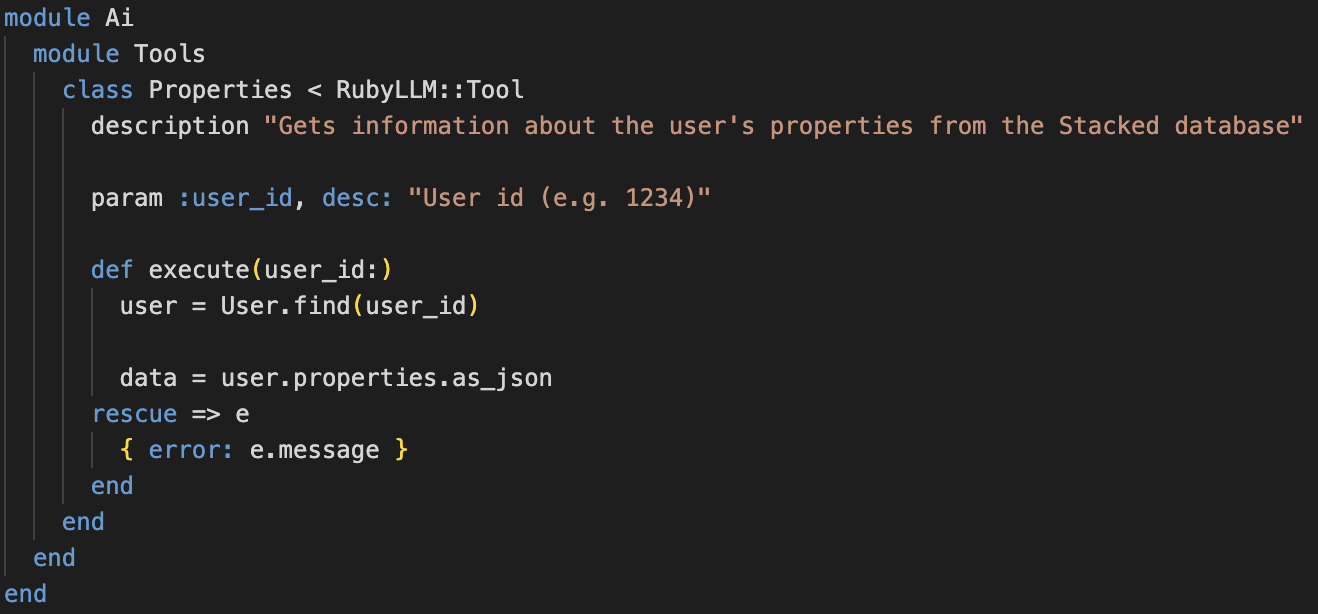

For example, we could create a Properties Tool, which fetches information about the properties that a particular user owns.

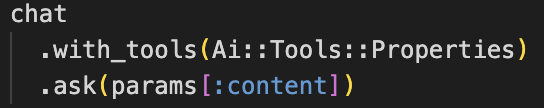

Having created this Tool, we can then make it available to our chatbot, so it's empowered to provide more informed and helpful responses.
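To make this more concrete, here is a minimal sketch of what such a Tool might look like. The `Property` and `User` structs are hypothetical stand-ins for the app's real models, `PortfolioSummary` and `PropertiesTool` are names I've made up for illustration, and the tool class is only defined when the `ruby_llm` gem is loaded, so the rest of the snippet runs as plain Ruby.

```ruby
# Hypothetical stand-ins for the app's ActiveRecord models,
# so the sketch runs on its own.
Property = Struct.new(:address, :current_value, keyword_init: true)
User = Struct.new(:name, :properties, keyword_init: true)

# Plain Ruby object that builds the data the chatbot will see.
# Keeping it separate from the tool makes it testable without calling an LLM.
class PortfolioSummary
  def initialize(user)
    @user = user
  end

  def to_h
    properties = @user.properties
    {
      property_count: properties.size,
      total_value: properties.sum(&:current_value),
      properties: properties.map do |property|
        { address: property.address, current_value: property.current_value }
      end
    }
  end
end

# Only defined when the ruby_llm gem is loaded (e.g. inside the Rails app).
if defined?(RubyLLM::Tool)
  class PropertiesTool < RubyLLM::Tool
    description "Lists the properties the current user owns, with their current values"

    # The tool is built for one user, so it can only ever see that user's data.
    def initialize(user)
      super()
      @user = user
    end

    # Run by RubyLLM when the model decides it needs portfolio data.
    def execute
      PortfolioSummary.new(@user).to_h
    end
  end
end

# Example data (made up for illustration).
user = User.new(
  name: "Example owner",
  properties: [
    Property.new(address: "1 Example Street", current_value: 250_000),
    Property.new(address: "2 Sample Road", current_value: 400_000)
  ]
)

summary = PortfolioSummary.new(user).to_h
puts summary[:total_value] # prints 650000
```

Inside the app, attaching the tool would then look roughly like `chat.with_tool(PropertiesTool.new(current_user)).ask("What is my portfolio worth?")`, where `current_user` is whatever your authentication layer provides. Passing the user into the tool, rather than letting the model supply a user ID, is a deliberate choice: it keeps each conversation scoped to the data of the person asking.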

Hey presto... we now have a localised chatbot that can provide helpful responses to domain-specific questions.

There is no limit to the number of Tools we can create and make available to our chatbot, as we work to expand the scope of its intelligence.

Of course, there is a raft of important considerations when it comes to protecting sensitive data, building a robust test suite and ensuring that access to these chatbots is tightly controlled from an authentication and authorisation perspective.

That said, by combining the RubyLLM gem with a set of domain-specific tools, you can supercharge your Rails app in all manner of exciting ways.

Happy coding!