# Getting GitHub Copilot to Generate a Generative Music App

# Idea

Originally, I asked GitHub Copilot to create a generative music app using Hugging Face NPM modules that could run on web, iOS, and Android using React and React Native. The requirements: the user enters a prompt describing the kind of music they want, the model lives locally in the app, and there is a play/pause button, a prompt history, and the ability to rewind (so some audio history as well), because the music should be generated more or less continuously.

I wanted to do as little coding as possible. I'm a busy person.

# The Result

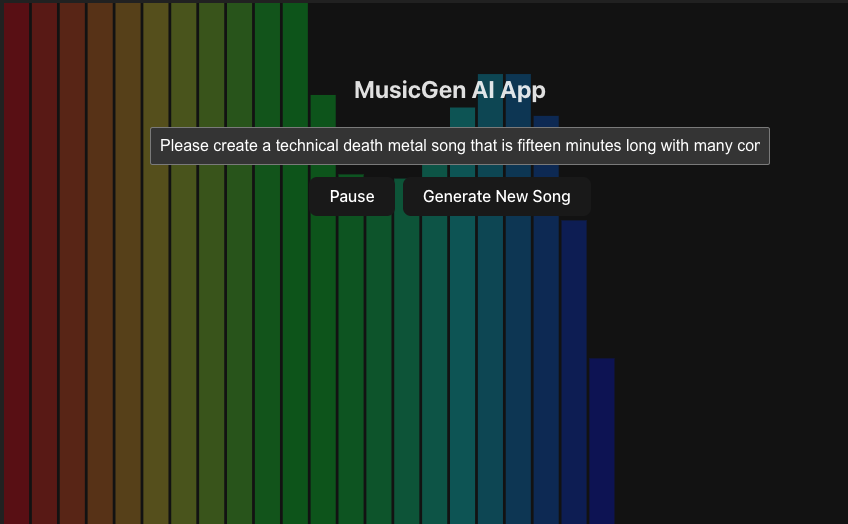

GitHub Copilot performed horribly. It didn't use the right Hugging Face NPM modules (it stuck with only @huggingface/inference), it created a Vite React project for the web but added no React Native dependencies beyond an audio visualizer, and the app didn't work on multiple levels. I asked it to make a waveform visualization, and it interpreted that differently than I had intended: first it made a static sinusoidal visualization unrelated to the audio, then, after further prompting, it literally converted the audio so it could create a static image of the audio's waveform. In later prompts I scrapped that in favor of a real-time, rainbow-colored equalizer, which Copilot handled much better. Additionally, Copilot implemented no user feedback such as a loading indicator, and it failed to update the README as we went along.

With some further prompting and some work on my part sorting out what I actually needed from Hugging Face, I did end up with a working, though still buggy, web app. It has the following features:

- User can generate music clips from text prompts. The clips are only about 15 seconds long. I don't know much about the model used, but that's what I got out of the box.

- User can play/pause the music clips.

- Music clips repeat indefinitely. I asked Copilot to add this feature, because the music clips are so short.

- User can see a loading indicator while the music is being generated. I asked Copilot to add this feature because (1) it didn't add one on its own, and (2) the model takes minutes to generate a single clip. Despite multiple iterations on the loading indicator, Copilot never got it working well.

- User can see a bar-graph equalizer visualization that updates in real time as the audio clips play. The bars are rainbow colored and the equalizer fits the viewport with the user interaction elements floating on top.

Copilot was little help with Hugging Face. On my own, I found the Xenova/musicgen-small model on Hugging Face's website. Copilot didn't know that a Xenova model is needed for compatibility with Hugging Face's NPM modules. I downloaded the model because I wanted it to run entirely locally, and I had to give Copilot specific prompts pointing at the musicgen-small folder and its README before it could wire the model up. Even then, it couldn't fix simple errors related to @huggingface/transformers not finding the local model, which I fixed myself.
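To give a sense of what the rainbow equalizer feature involves, here is a minimal TypeScript sketch. It is not the code Copilot generated. It assumes the audio is analyzed as an array of byte values (0 to 255), which is the format the Web Audio API's `AnalyserNode.getByteFrequencyData` produces. The names `Bar` and `computeBars` are hypothetical and exist only for illustration.

```typescript
// One bar of the equalizer, in canvas pixel coordinates.
interface Bar {
  x: number;
  y: number;
  width: number;
  height: number;
  color: string;
}

// Convert one frame of byte frequency data (values 0..255) into
// rainbow-colored bars that span a canvas of the given size.
// Hypothetical helper: the name and signature are assumptions.
function computeBars(
  frequencyData: Uint8Array,
  barCount: number,
  canvasWidth: number,
  canvasHeight: number
): Bar[] {
  const bars: Bar[] = [];
  if (barCount <= 0 || frequencyData.length === 0) return bars;

  // Group neighboring frequency bins so each bar averages a slice of them.
  const binsPerBar = Math.max(1, Math.floor(frequencyData.length / barCount));
  const barWidth = canvasWidth / barCount;

  for (let i = 0; i < barCount; i++) {
    const start = i * binsPerBar;
    const end = Math.min(start + binsPerBar, frequencyData.length);

    let sum = 0;
    for (let j = start; j < end; j++) sum += frequencyData[j] ?? 0;
    const average = end > start ? sum / (end - start) : 0;

    // Scale the bar height to the canvas and draw it up from the bottom edge.
    const height = (average / 255) * canvasHeight;
    // Spread hues across the color wheel to get the rainbow effect.
    const hue = Math.round((i / barCount) * 360);

    bars.push({
      x: i * barWidth,
      y: canvasHeight - height,
      width: barWidth,
      height,
      color: `hsl(${hue}, 100%, 50%)`,
    });
  }
  return bars;
}
```

In a browser, something like this would be called once per animation frame: fill a `Uint8Array` with `analyser.getByteFrequencyData(data)`, pass it to `computeBars` along with the canvas dimensions, and draw each bar with `ctx.fillStyle = bar.color` followed by `ctx.fillRect(bar.x, bar.y, bar.width, bar.height)`. Keeping the math separate from the drawing makes it easy to check without a canvas.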

# Conclusion

Unfortunately, the app's UX leaves a lot to be desired, and generating the short music clips takes far too long. It's not a particularly useful app. There are plenty of refinements that could be made, but I'm not sure Copilot is up to the task for all of them.

It's important to remember that the prompts you provide to Copilot go a long way toward determining the response you get back. Additionally, all tools have trade-offs: at a certain point Copilot loses the thread on lengthy tasks, and even on shorter tasks it makes assumptions or hallucinates in gray areas. Currently, adding AI to your development process absolutely requires detailed code review by people and involves fixing plenty of unexpected, sometimes trivial bugs.

Still, using Copilot was interesting because it allowed me to multitask at times. For example, I could look up Xenova models while Copilot fixed an error or worked on a small UI feature. I can see it as a way to create working mockups quickly. For larger and more complex projects, its usefulness seems limited to an as-needed basis: for example, mocking up a new feature or refactoring something specific with limited scope while you work on something else.

# Steps to Run

- Git clone my repo: https://github.com/cipherphage/Copilot-Generated-Generative-Music-App

- In the `/models` directory, clone the Xenova/musicgen-small repo by following the instructions here: https://huggingface.co/Xenova/musicgen-small/tree/main

- Run `npm install`

- Run `npm run dev`