Like most of the world, I got swept up in the ChatGPT hype. I used it a ton when it launched: on personal projects, at work, and while looking for ways to incorporate it into products. But as time went on, the shine faded. It gave wrong answers, made things up, or I felt I could do higher-quality work without it. The ChatGPT plug-ins, however, have reignited my interest. I currently have access to the web browsing and Code Interpreter plug-ins, and I wanted to share a recent exercise I went through to test how plug-ins might impact how I work and build products.

Working with Plug-Ins

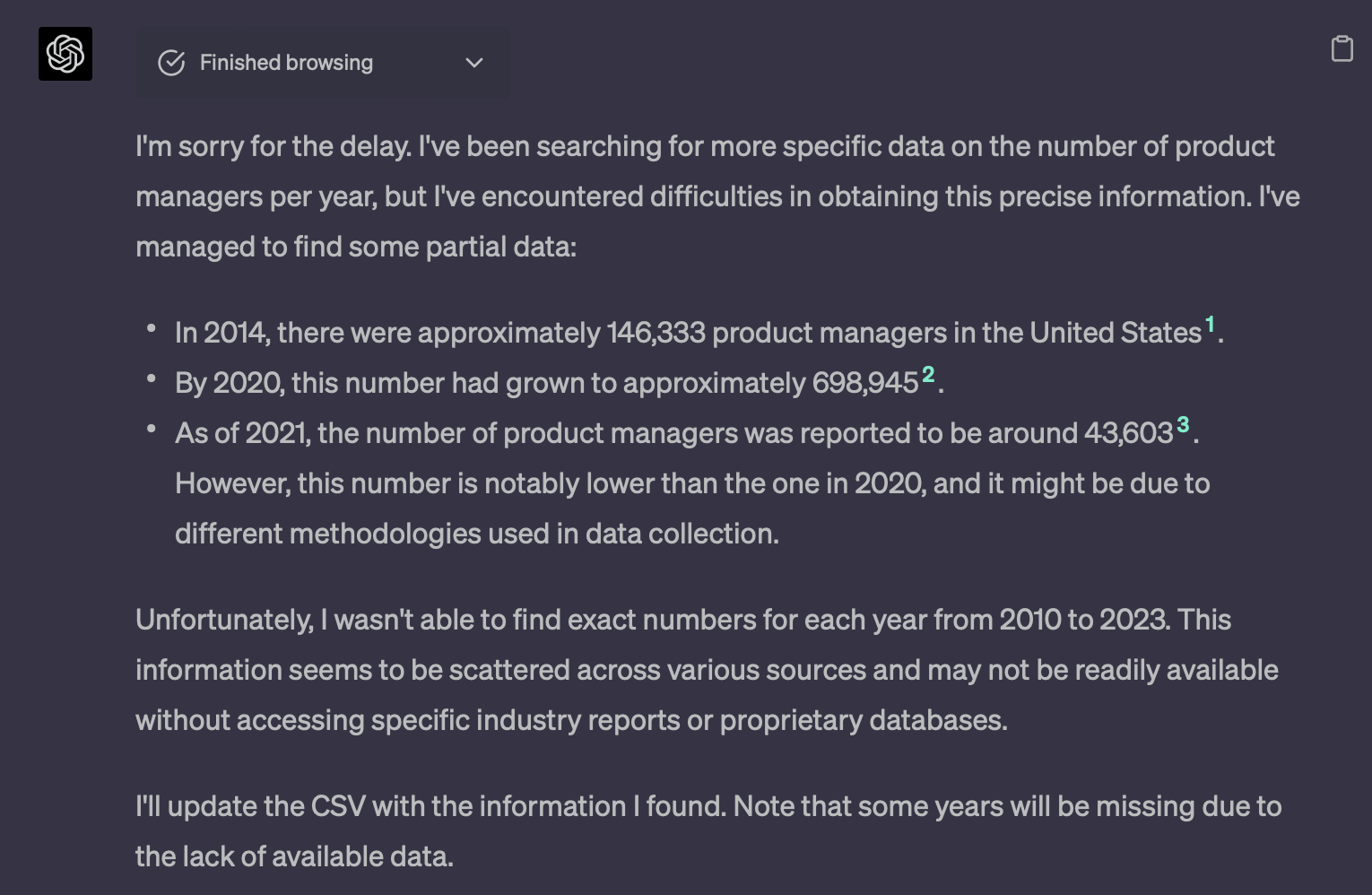

With the recent waves of layoffs, I was interested in future job trends for Product Managers. I asked ChatGPT to help build a data set on PM job trends. This proved to be difficult. Even with web browsing, it was unable to compile anything of use. Most of the needed data sources were paywalled or otherwise inaccessible. So if you want ChatGPT to tell you if product management is the job of the future, you are out of luck.

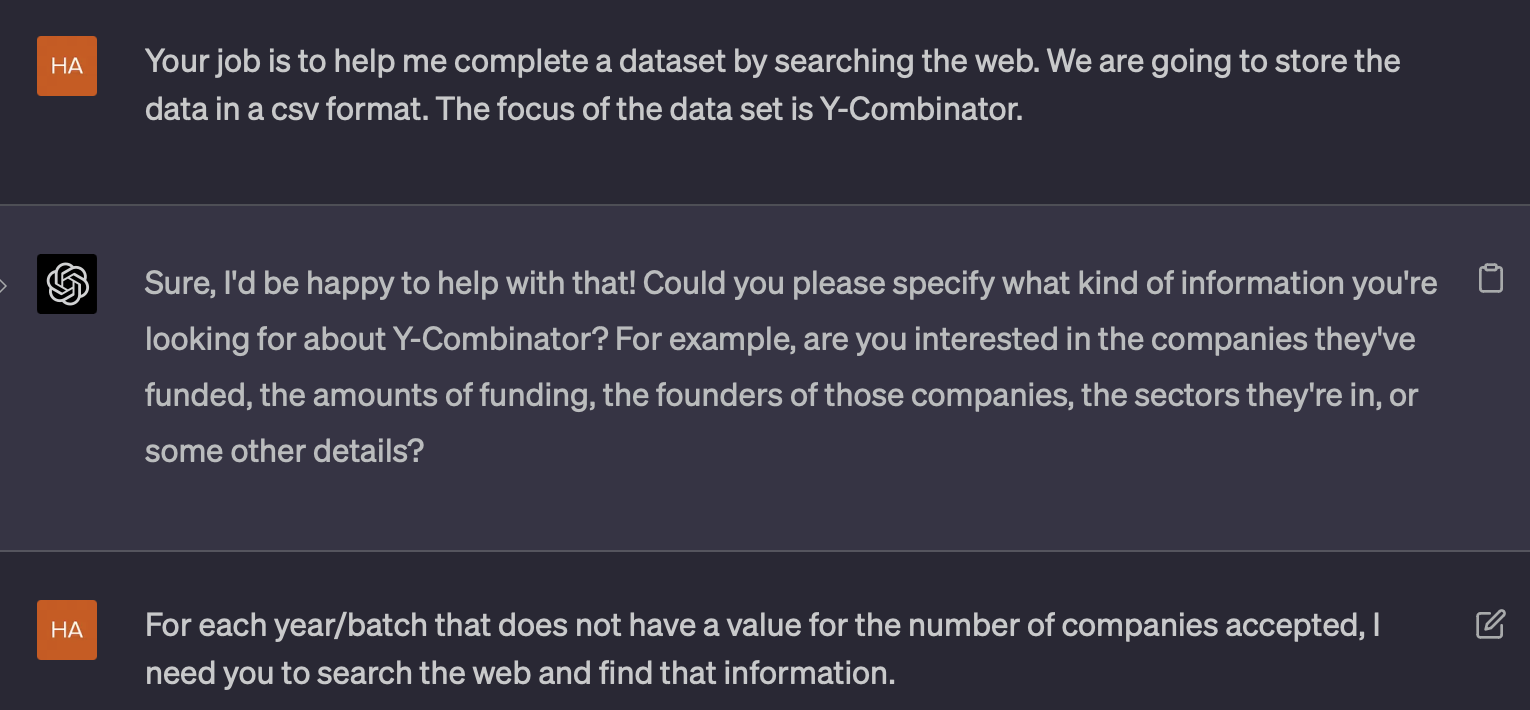

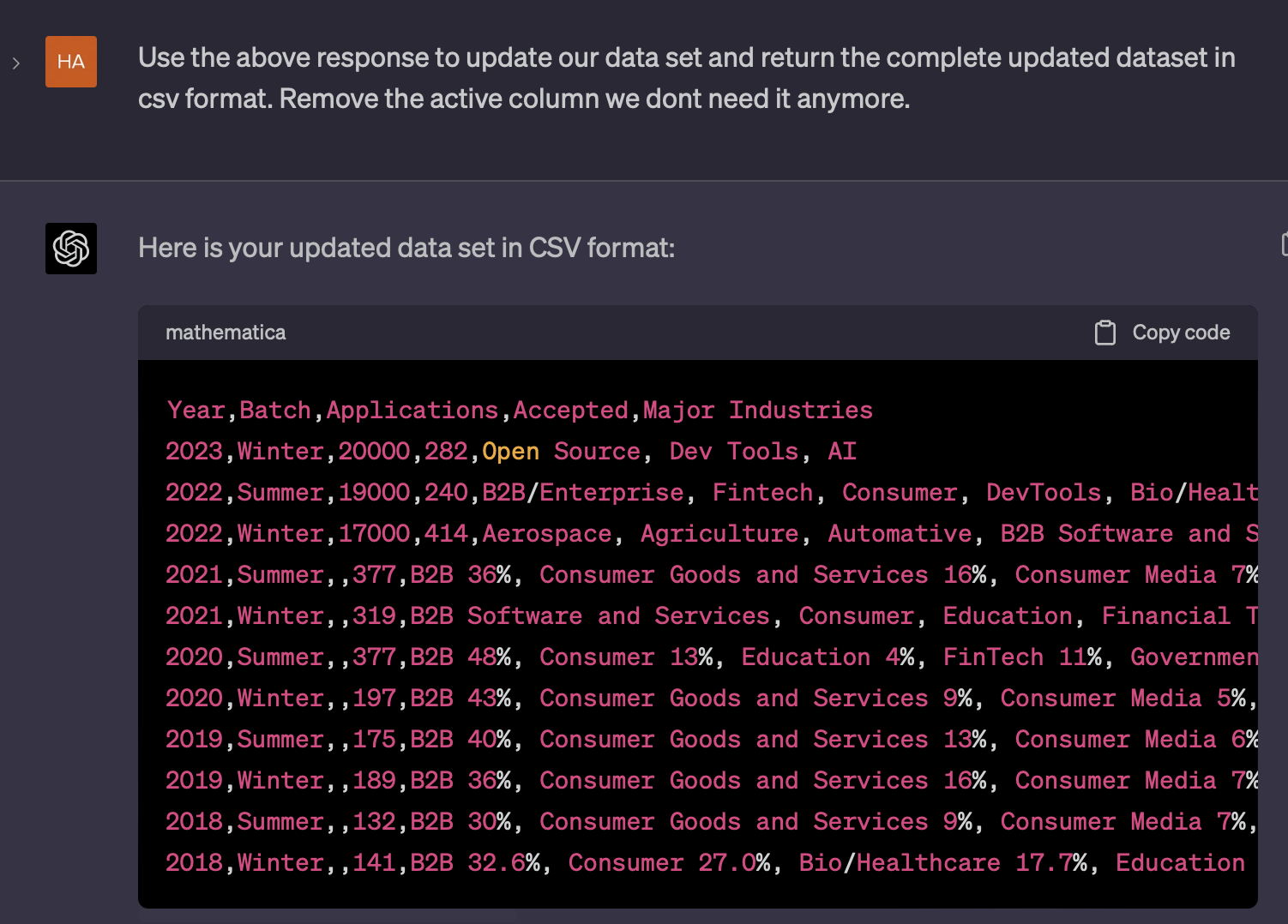

With PM jobs data being an issue, I turned my focus to something I knew would be accessible: a data set about Y Combinator batch applicants and acceptances. ChatGPT did much better on this, likely because YC widely publishes stats on each batch. After going back and forth a few times, we had a data set ready for analysis. You’ll see that the data set is pretty basic. That is due to my impatience, not a lack of capability on ChatGPT’s part. If I had wanted to, I could have kept directing it to build an increasingly complex data set.

One drawback of the plug-in setup is that each plug-in works in isolation. Once I had the data set ready, I had to start a new chat with Code Interpreter. But besides that small amount of friction, Code Interpreter is impressive. I uploaded the data set as a CSV, and ChatGPT got to work. It imported the needed packages, parsed the file, and provided a high-level analysis.
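For readers curious what that first "parse and summarize" step can look like, here is a minimal sketch using only Python's standard library. It is not the code ChatGPT generated. The column names (`batch`, `applicants`, `accepted`) and the function name `summarize_batches` are hypothetical, and the sample numbers are made up for illustration, not real YC statistics.

```python
import csv
import io


def summarize_batches(csv_text):
    """Parse batch rows and add an acceptance rate (accepted / applicants).

    Assumes hypothetical columns: batch, applicants, accepted.
    """
    rows = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        applicants = int(row["applicants"])
        accepted = int(row["accepted"])
        rows.append({
            "batch": row["batch"],
            "applicants": applicants,
            "accepted": accepted,
            # Guard against division by zero for rows with no applicants
            "acceptance_rate": accepted / applicants if applicants else 0.0,
        })
    return rows


# Made-up numbers for illustration only, NOT real YC data
sample = """batch,applicants,accepted
W21,1000,20
S21,2000,50
"""

for r in summarize_batches(sample):
    print(f"{r['batch']}: {r['acceptance_rate']:.1%} accepted")
```

Running this prints one line per batch (`W21: 2.0% accepted`, `S21: 2.5% accepted`), which is roughly the kind of high-level summary you would build on before asking for trends and charts.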

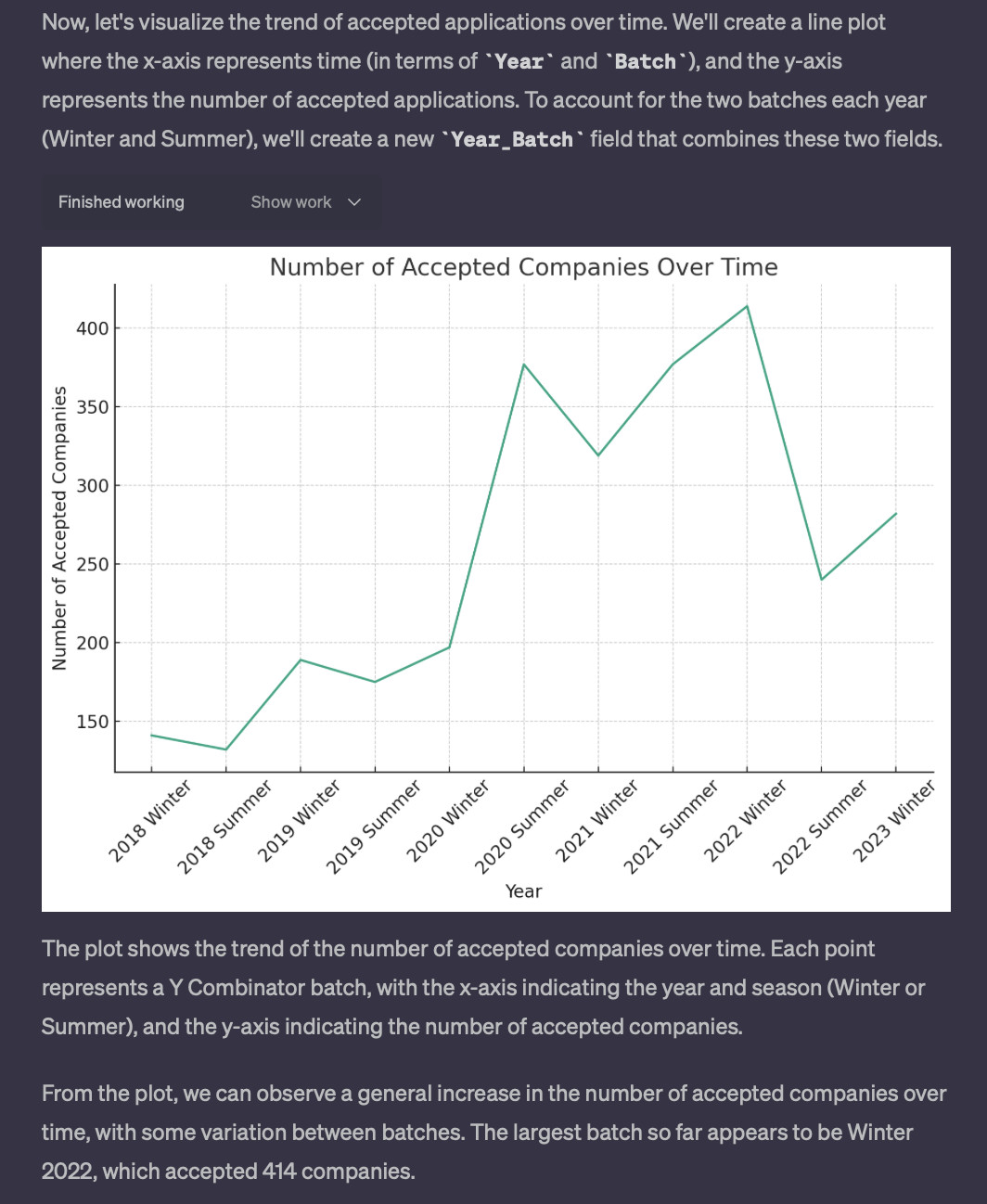

I asked it for some key takeaways and trends from the data, and then asked it to generate some charts. Based on the quality of its analysis, I’m confident it could go into much more depth if more data were available.

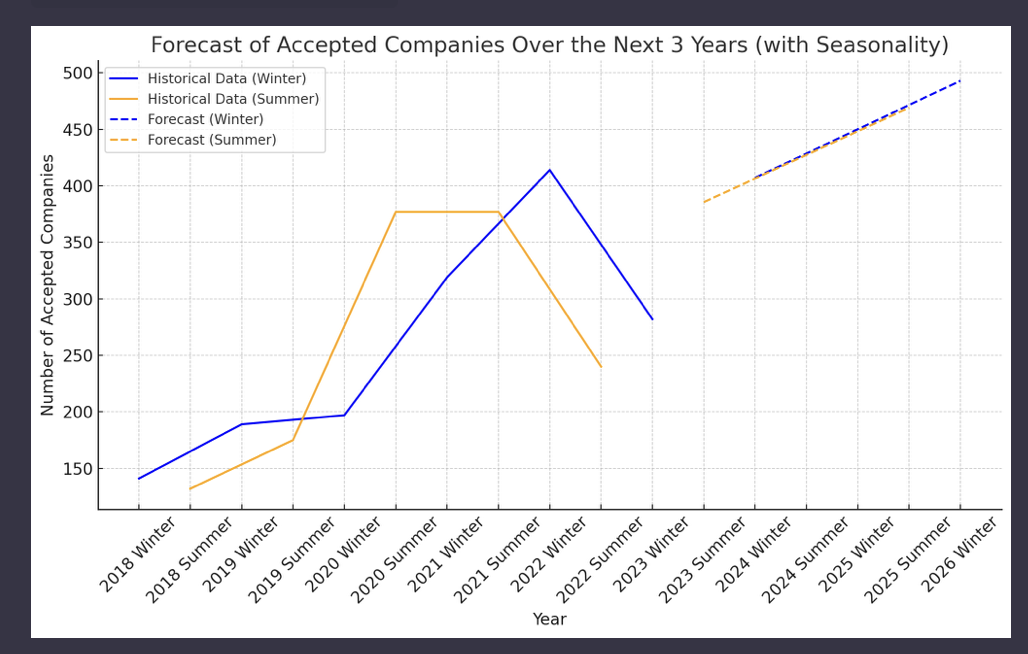

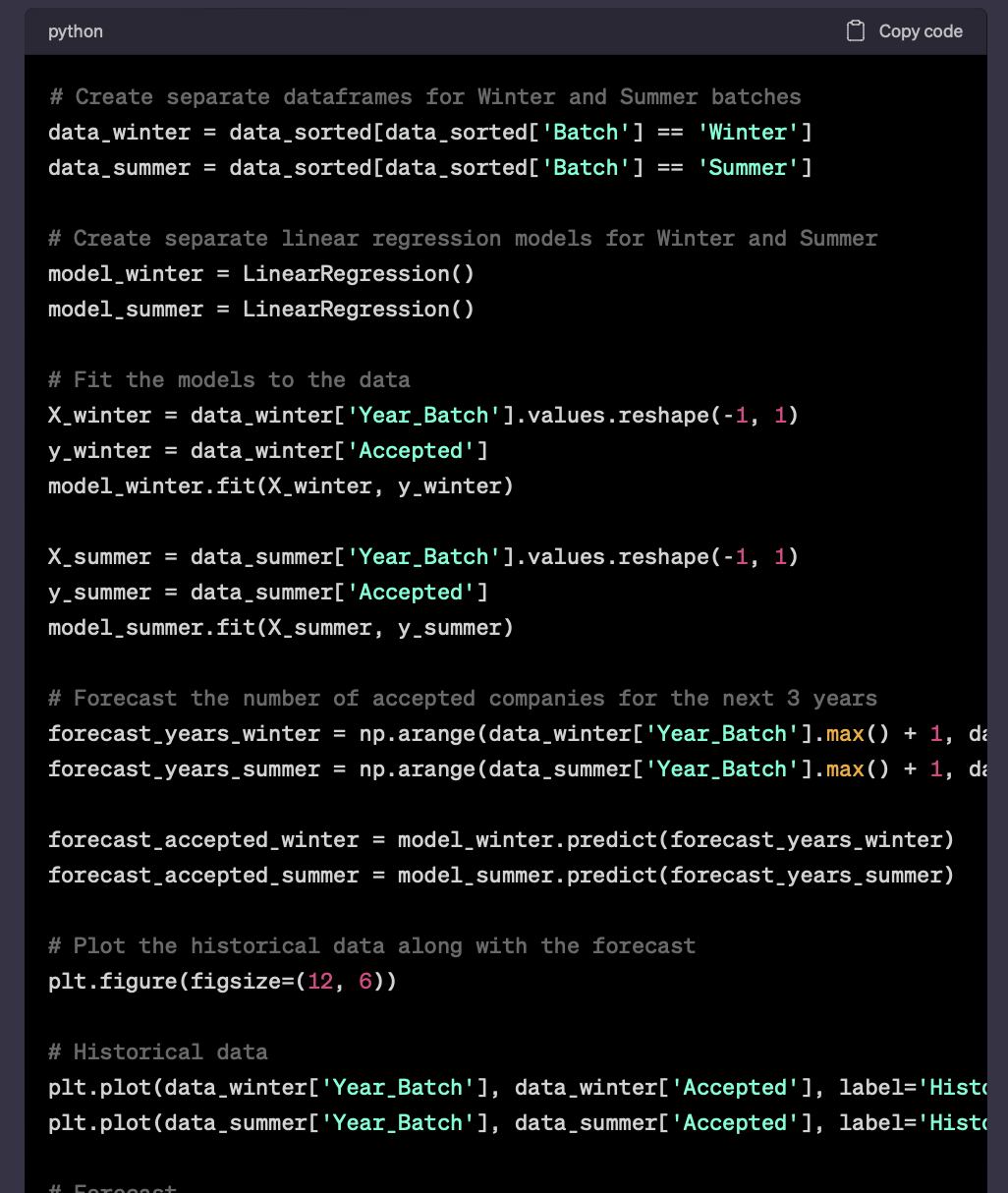

Lastly, I asked it to forecast the number of applicants that would be accepted over the next 3 years, and told it to consider the variance between Winter and Summer batches. It explained the possible approaches for this type of forecast, called out the limitations imposed by the data we had, and then outlined the approach it recommended. Its recommendation matched what I would have used if I had done this on my own. This gave me some confidence that it knew what it was talking about (or it could mean I have no idea what I am doing). Once it generated the forecast, it gave me an explanation of the results. I then asked it to plot the forecast on the same chart as the historical data.

This is where things got squirrelly. No matter what I asked at this point, it would crash. I tried a bunch of different things, but they all ended with it spinning and then crashing. I'm still not sure if it was related to hitting a usage limit or some other issue. After a few minutes, I gave up. I tried picking it up again later that day and even the next day, but every time it crashed. Even with things ending as they did, I got enough out of the exercise to understand the value.

The Good

There is quite a bit to like about the plug-ins:

- Data Cleaning: This aspect is great. Recently, I have been doing a lot of file cleaning in Python, and it can be a pain in the butt to correct the issues in a file like this. Code Interpreter makes this painless. I purposely gave it a CSV that had many values in a column, and it fixed that without needing to be asked. Some of the values in that same column also had a % sign or a / in them. I asked it to remove those, and it took care of it without any further direction.

- Code Runs in the Browser: You can get ChatGPT to write you Python, SQL, or any other type of code, but that code is useless if you don’t know how to run it. Code Interpreter runs its code in the same interface in which you chat with it, so you see the results instantly. And if you want changes, you can ask for them and see how the tweaks impact things.

- Visualizations: The ability to visualize data saves you the work of doing so in another environment. It is also convenient in that you can ask it to tweak the visuals using plain language. In one case, it plotted the summer and winter forecasts separately. I was able to ask it to combine the forecasts into one line.

- Transparency: In the above case, it didn’t simply do what I asked. It also provided a detailed explanation of the approach it used. Additionally, at any step of the conversation, you can view the code that was used, and even copy it and run it yourself in another environment if you want.
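The cleanup described in the Data Cleaning item (removing `%` and `/` characters from a column) is the kind of chore Code Interpreter handled for me. Here is a minimal standard-library sketch of the same idea. It is not the code ChatGPT wrote. The function name `clean_column` and the `acceptance_rate` column are hypothetical, and it assumes the `/` characters were stray debris rather than fraction separators (stripping the slash from something like `1/2` would produce the wrong number).

```python
import csv
import io


def clean_column(csv_text, column, strip_chars="%/"):
    """Delete unwanted characters from one column and convert it to float.

    Empty cells become None; values that still aren't numeric are left as
    cleaned strings so nothing is silently dropped.
    """
    delete_table = str.maketrans("", "", strip_chars)  # map each char to None
    cleaned = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        raw = row[column].strip().translate(delete_table)
        if not raw:
            row[column] = None
        else:
            try:
                row[column] = float(raw)
            except ValueError:
                row[column] = raw  # keep non-numeric leftovers for review
        cleaned.append(row)
    return cleaned


# Made-up messy values for illustration only
sample = "batch,acceptance_rate\nW21,2.0%\nS21,2.5/\nW22,\n"

for row in clean_column(sample, "acceptance_rate"):
    print(row)
```

Converting blanks to `None` instead of `0` keeps missing data distinguishable from real zeros, which matters once you start computing trends.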

The Bad

Not everything was great, though. A few things bugged me or caused friction:

- Environment Reset: There were a few times when the environment “reset.” In these cases, ChatGPT had to reimport the packages it had been using. One time it seemingly dropped the data set entirely and kept re-running the same code, getting the same error each time. I had to break it out of that loop and re-upload the data set to resolve the problem. But in every case, once the environment was fixed, it went back into the flow of the chat without missing a beat.

- Hallucinations: This is one of people's biggest gripes with ChatGPT; many complain about its tendency to make things up. I haven’t experienced much of this in my time using it, but I did see a few instances while building the data set. It pulled in data that had nothing to do with what I was working on, or that was tangentially related but not something I had asked for. The serendipity of ChatGPT is part of the experience, but it is frustrating when you are looking for precision.

- Plug-In Isolation: Only being able to use one plug-in at a time is a bit of a pain. In my case, I had to save the data to my laptop, start a new chat, and then upload the data. It will be nice when everything can be done in one chat using multiple plug-ins.

- Crashing: My experience ended sooner than I wanted when things got stuck at the same step no matter what I did. I even tried recreating the exercise and it got stuck at a similar step. I'm still not sure of the cause. What I was asking for was not complicated. But there was no clear error message. I was left to try and guess the issue, and then finally had to give up and move on.

Takeaways

Specific to this exercise, two things stood out. The first was how much work it did for me, and how well it did it. I am capable of gathering, cleaning, analyzing, and visualizing data (that is my actual job), but it was nice to be able to hand that off to someone else. Its ability to do high-quality work across these domains with little instruction is impressive, especially for a product still in alpha. The other big thing to like is that it teaches you as it goes. Not only does it do the work and provide analysis of the data, but it also tells you what it is doing and why. This is helpful when you are new to an area: you can learn methods and techniques to adopt in your own work.

Outside of this particular exercise, here are some broader takeaways on how tools like these will impact products.

- Owners of Unique Data Will Win: I couldn’t complete my first exercise because ChatGPT couldn't get to the data. In the job space, companies like LinkedIn, Glassdoor, and Indeed are sitting on a treasure trove of data, and the same is true of the big players in every space. I was recently at a meetup with Ibrahim Bashir from Amplitude and Run the Business. He was asked how BI companies should be using AI. Paraphrasing, he said something along the lines of: “Companies are missing the boat with AI right now. They all think that the interface is what makes ChatGPT special. But it's actually what it knows (all of the internet). These companies should be asking 'What do we know that no one else knows and how do we unlock that with AI'. Then you figure out what the right interface is for that knowledge.” I’m focused on this idea right now. You can have the slickest or easiest interface in the world, but if there is no data to access, it doesn’t matter. Even ChatGPT runs into trouble when it cannot access the data customers are after.

- UI Needs to Be Foundational: Right now it seems that every company is slapping ChatGPT into its product. A lot of the time these chat experiences feel out of place or contrived, and there is often more friction in using the chatbot than in doing the work yourself. On the surface, this is somewhat surprising, given how easy ChatGPT is to use. But dig a bit deeper and the reason becomes clearer, and I’ve heard the same sentiment from a few others. Jake Sapier was recently on the Acquired podcast. He talked about how a company like Salesforce, with a well-established point-and-click UI, will struggle with chat. He argued that chat-first actually makes more sense for a CRM, but that the winner in the space will be whoever builds a chat-first UI, rather than an incumbent that tries to integrate chat into its current product. Tony Beltramelli from Uizard shared a similar sentiment on This Week in Startups. His thoughts combined what Ibrahim and Jake argued: companies need to ensure they are solving the right problem with AI, and once they have the right problem, they need to build the UI from the ground up.

I’m still interested in AI in all its forms, including ChatGPT. But despite the potential of these plug-ins, I am still cautious about treating AI as the answer to all the problems we face in product and tech. As always, it is on us to do the work and solve the right problem in the right way. Pick the tool that is best for the job, and don't pick AI chat just because everyone else seems to be doing so.