Important note: around the time this article was published, Anthropic announced via email that it is retiring claude-3-sonnet-20240229, the model used in the examples. Use Claude Sonnet 4 (claude-sonnet-4-20250514) instead.

If you are reading this post, you are likely building, or at least interested in building, LLM-based applications.

I'm quite fond of having a quick development cycle based on my "flavor" of TDD, where I write tests that cover only the core functionality of my code. I love writing tests that prove that the application behaves as expected as a whole. Have you heard about component testing? Big fan here!

A component is a cohesive group of related functions, classes, or modules that work together to provide a specific capability or service within a larger application. It has well-defined interfaces (inputs/outputs) and can be developed, tested, and potentially deployed independently of other parts of the system. Examples include a payment processing component, a user authentication component, or in our case, an LLM interaction component that handles prompts, routing, and responses.

Very likely, anyone thinking about developing an application that uses an LLM is also thinking of using LangChain, a "framework" for building applications with LLMs. Its libraries are becoming very comprehensive and I find them well documented: a no-brainer, if you ask me.

Now, if I want to test my application's interaction with an LLM without resorting to end-to-end testing (and therefore without consuming LLM API credits), I need to "simulate" an LLM response. Luckily, LangChain makes this easy by providing fake LLMs for exactly this purpose.

A simple sample

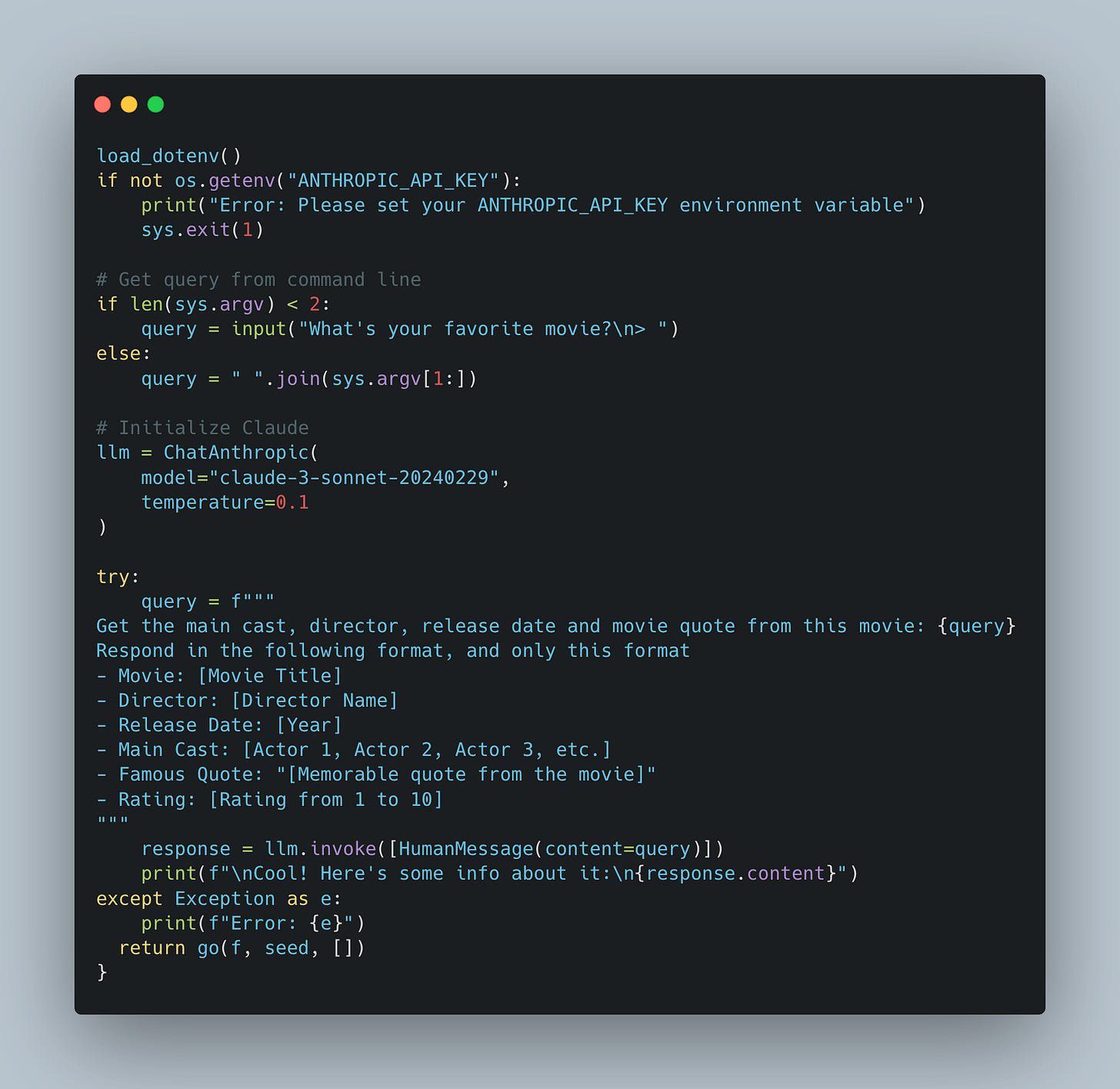

Let's take this simple script:

There's nothing too special about these few lines of code. All they do is take a movie title from the command line and use LangChain to get a response from Claude in a particular format.

Now, I could just run this script, look at the output, and it would all work fine. But what if I want the LLM's response in a structured format that I can later use to compose my own response?

Structured responses from LLMs

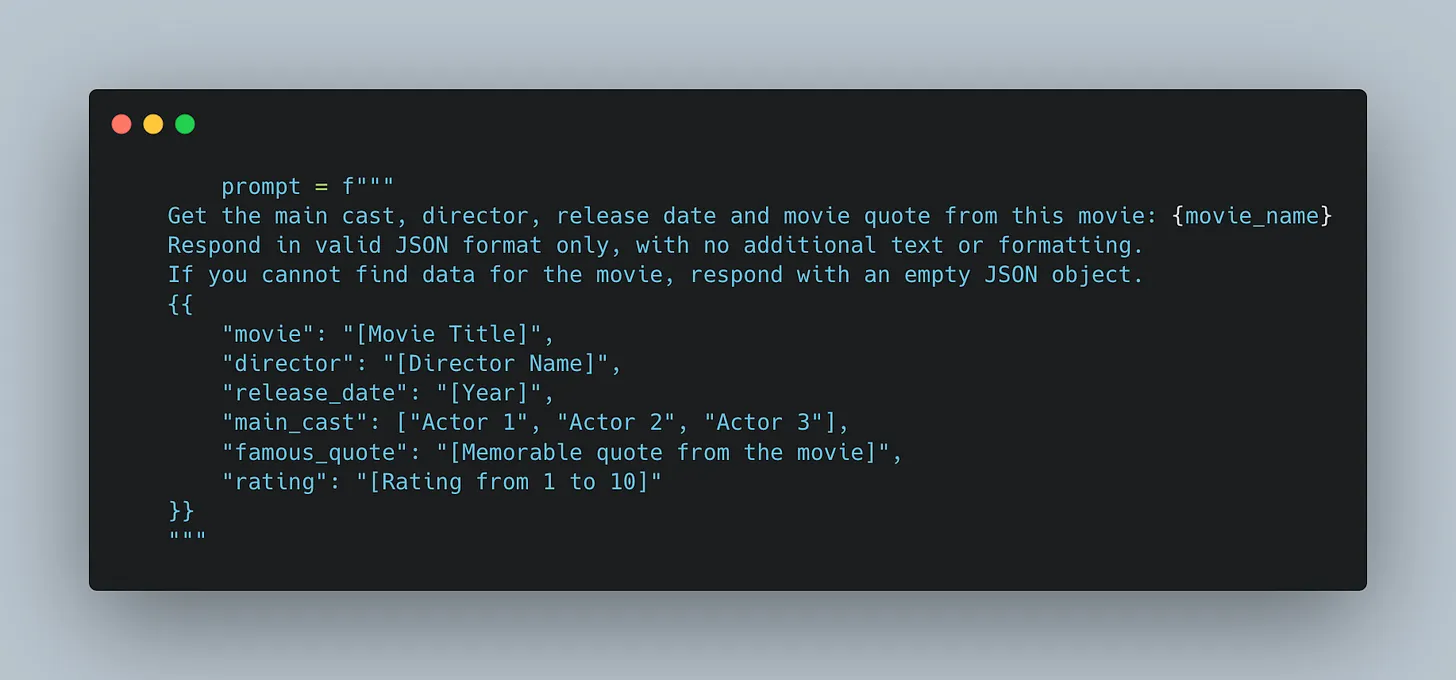

A very common technique is to ask the LLM to return a JSON object and include an example of that object in the prompt. For instance:

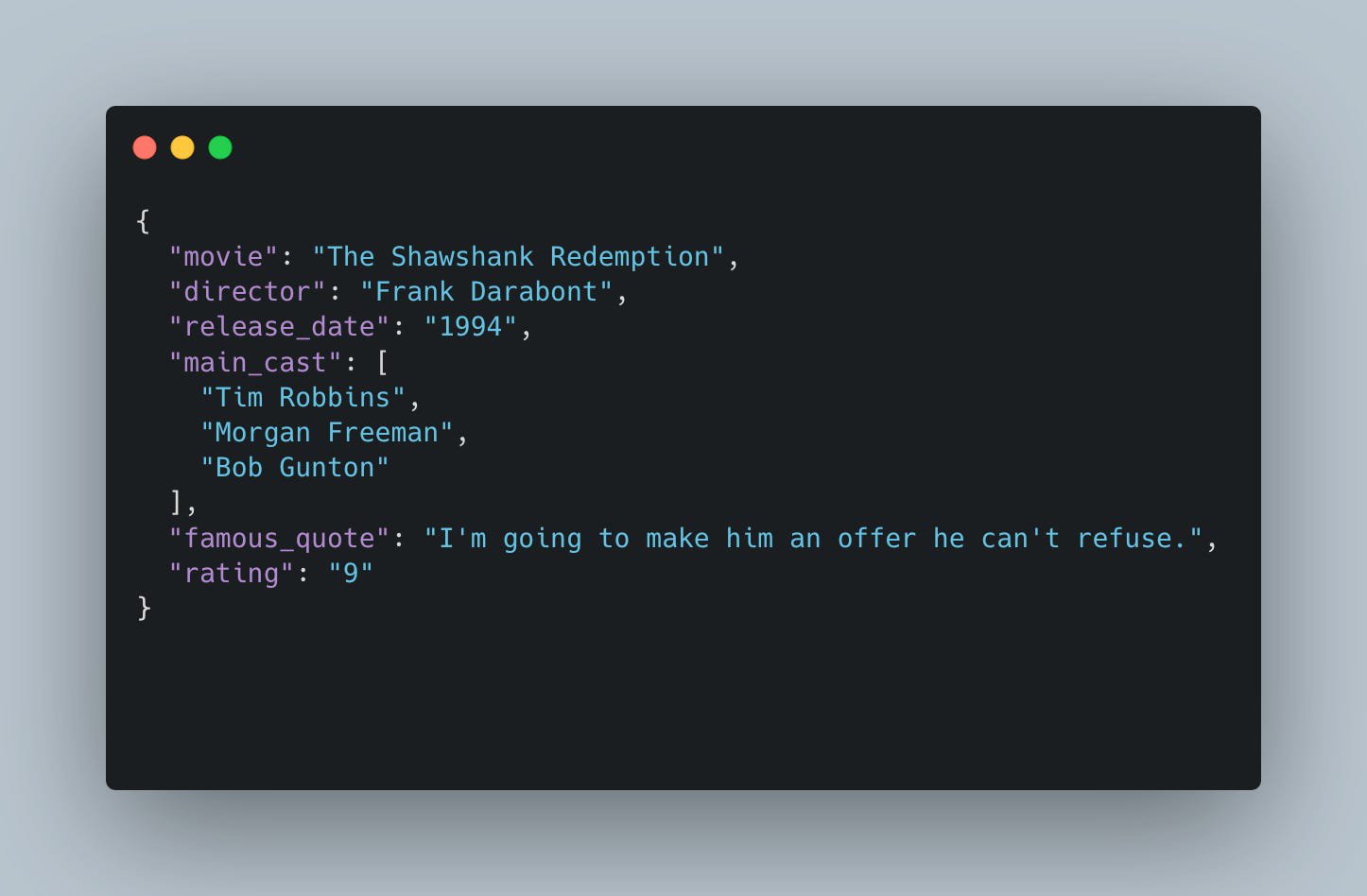

The LLM should (remember that LLMs are non-deterministic) return something like:
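As an illustration, here is a hypothetical reply for "Alien" in the shape of the prompt's example. The sketch loads it with the standard `json` module to confirm that it is valid JSON:

```python
import json

# Hypothetical LLM reply, shaped like the example in the prompt.
raw_response = '{"title": "Alien", "year": 1979, "director": "Ridley Scott"}'

movie = json.loads(raw_response)  # a plain dict with the three fields
print(movie["title"], movie["year"], movie["director"])
```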

Now I can easily parse this JSON object, for example with pydantic, and use it for my own purposes. First, though, I need to modify the function that queries the LLM so that it validates and parses the response.
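A sketch of the pydantic side, assuming pydantic v2 (`model_validate_json`). The `Movie` model and its fields are hypothetical and mirror the JSON example. Validation fails loudly on replies that are malformed or missing fields:

```python
from pydantic import BaseModel, ValidationError


class Movie(BaseModel):
    # Hypothetical fields mirroring the JSON example used in the prompt.
    title: str
    year: int
    director: str


def parse_movie(text: str) -> Movie:
    """Parse and validate raw LLM text; raises ValidationError if it is not valid movie JSON."""
    return Movie.model_validate_json(text)


movie = parse_movie('{"title": "Alien", "year": 1979, "director": "Ridley Scott"}')
print(movie.title, movie.year)

try:
    parse_movie('{"title": "Alien"}')  # missing fields are rejected, not silently accepted
except ValidationError as exc:
    print(f"Invalid movie JSON ({exc.error_count()} errors)")
```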

- Problem: I don't want to run the code against the real LLM API. It is slow and, call me cheap, I don't want to spend my credits on tests.

- Solution: use LangChain's fake LLM helper to simulate the response.

Let the testing begin

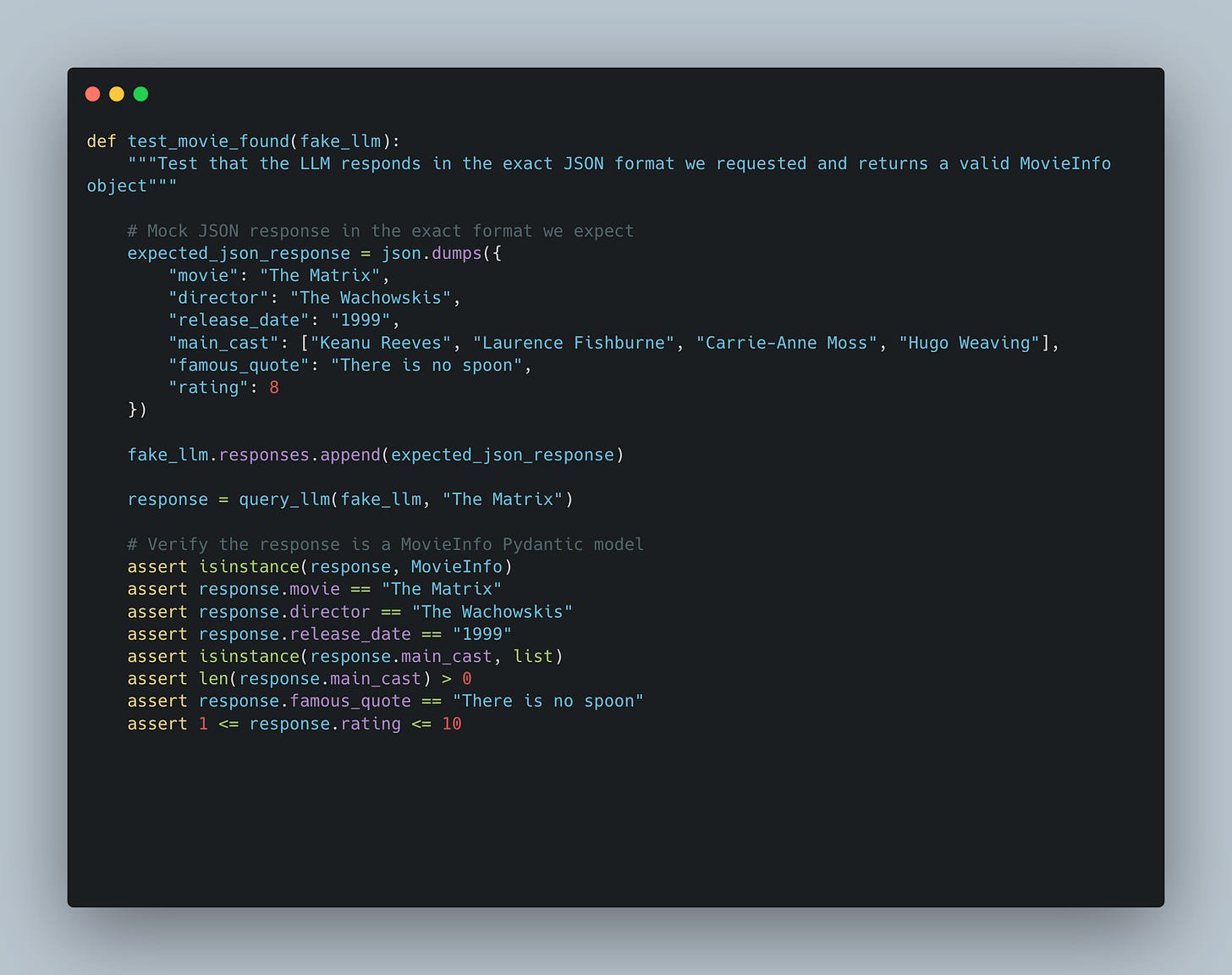

Since I love TDD, I start by writing the first happy path test:

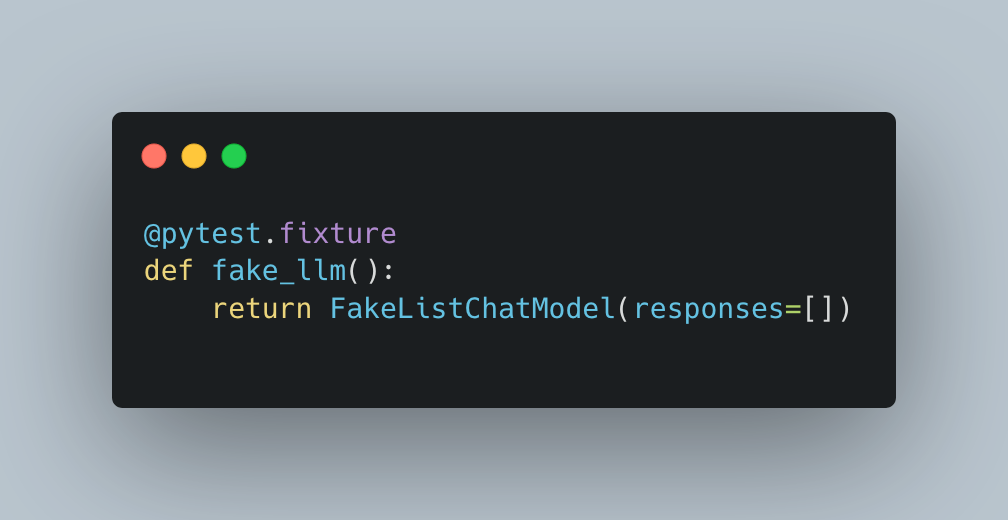

To run this test, I need an instance of FakeListChatModel. I create it with a fixture function that returns the model object, since I'll be reusing it in multiple tests.

Now, I'm ready to start refactoring the application code!

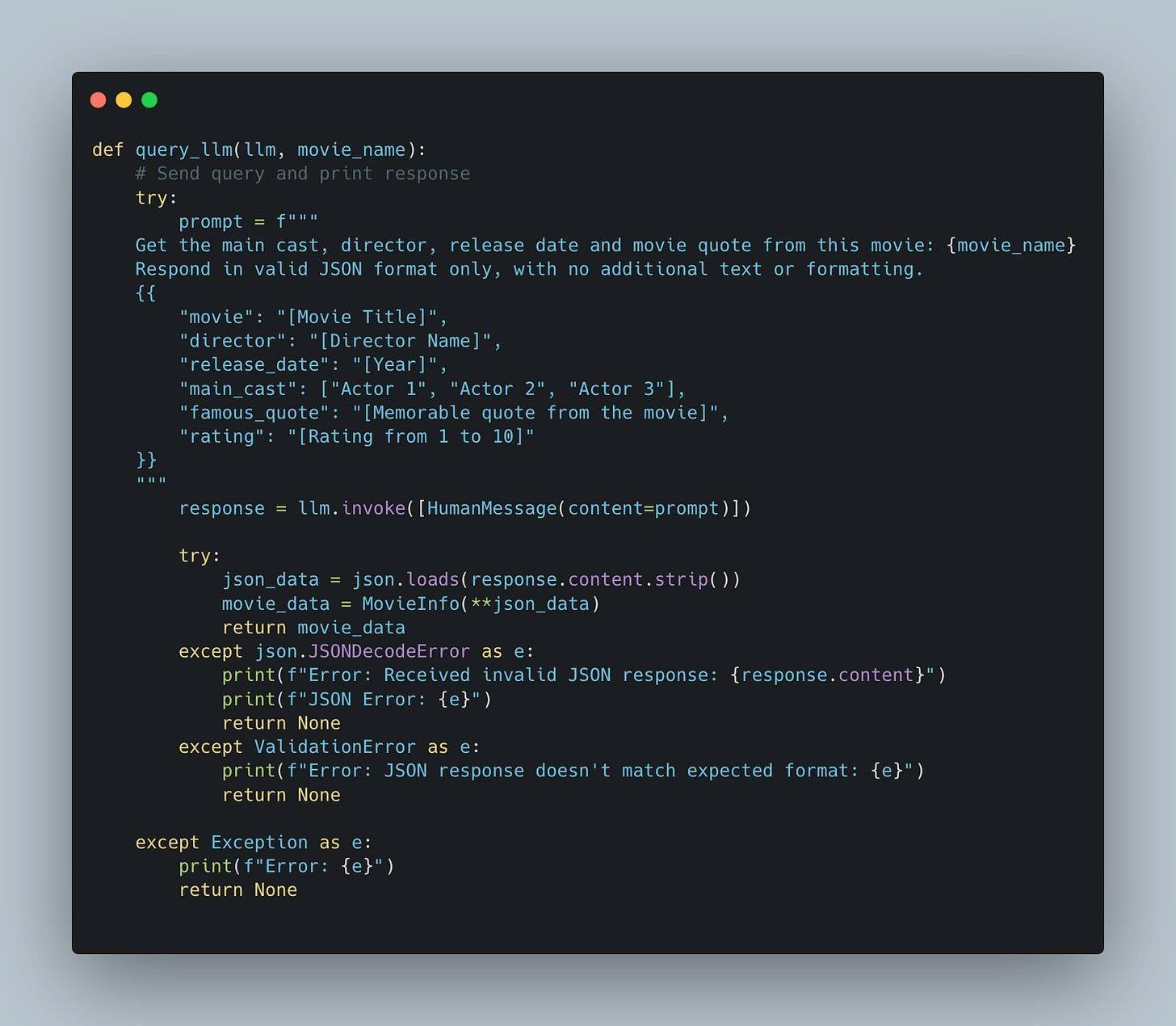

As a first step, I need to move the logic that calls the LLM into its own function that takes an llm object as an argument:

Notice that I'm now passing the llm object as an argument to the function. This is on purpose: it lets me use dependency injection to pass a fake LLM into the function.

Awesome. The first test passes, so I can move on to the next step.

"Wait... what movie???"

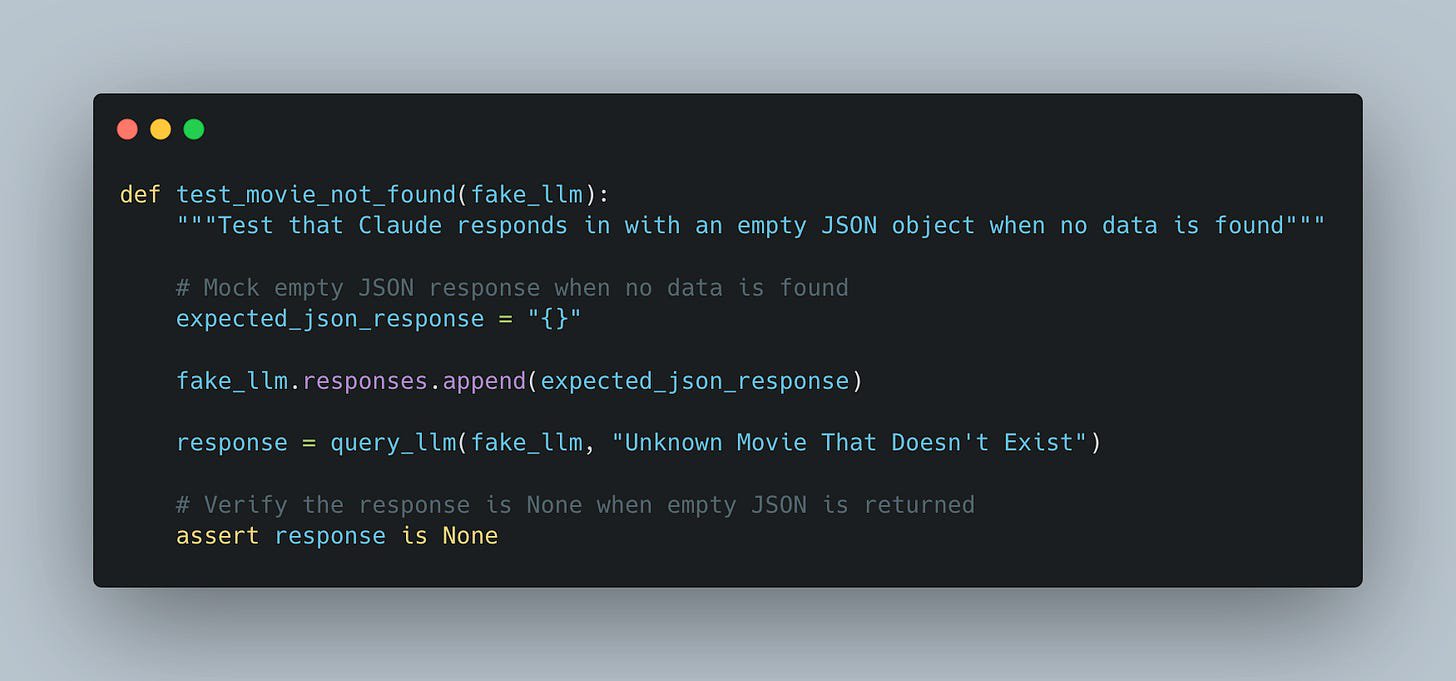

It's all fine and dandy when the LLM can find the movie you requested. But what if it can't? If the LLM returns text saying something like "I don't know about that movie", the application will fail, because it cannot parse that text as a JSON object.

Following the principles of TDD, I need to write a test that covers this scenario.

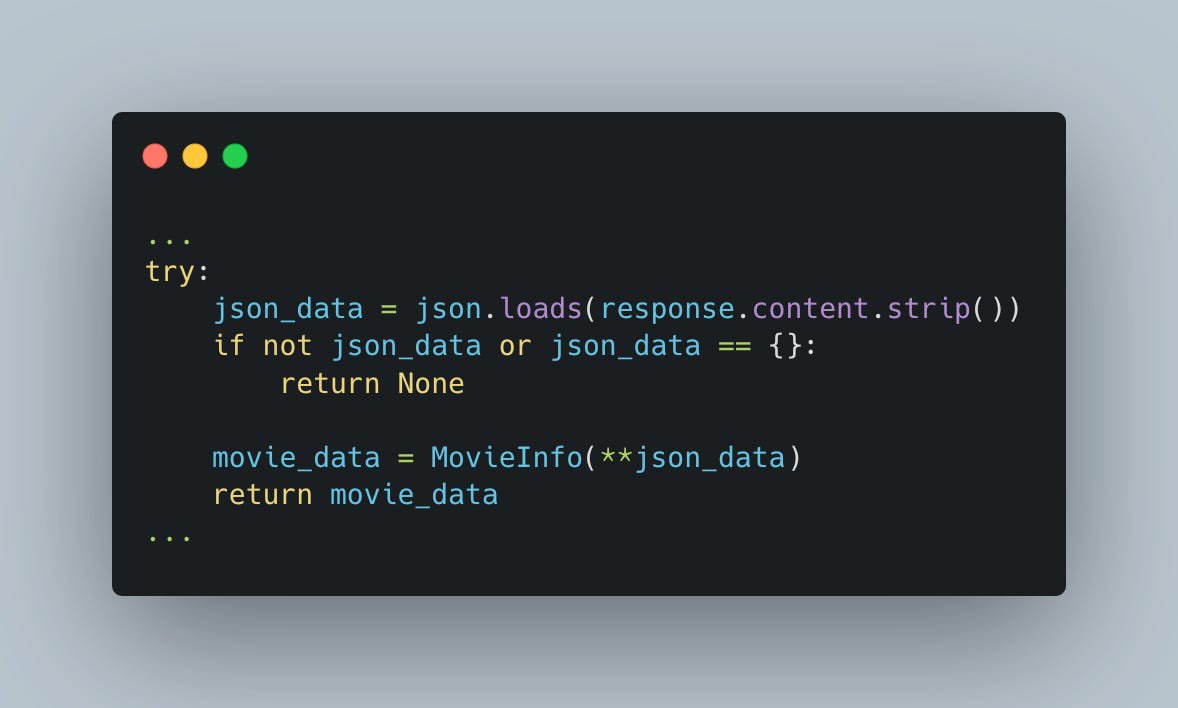

The first run, of course, fails as expected. Now let's add just a couple of lines to the application code:

With these two lines, I check that the parsed JSON response is not empty. If it is empty, I return None to indicate that no data was found.

The test now passes, and the application can now handle both scenarios.

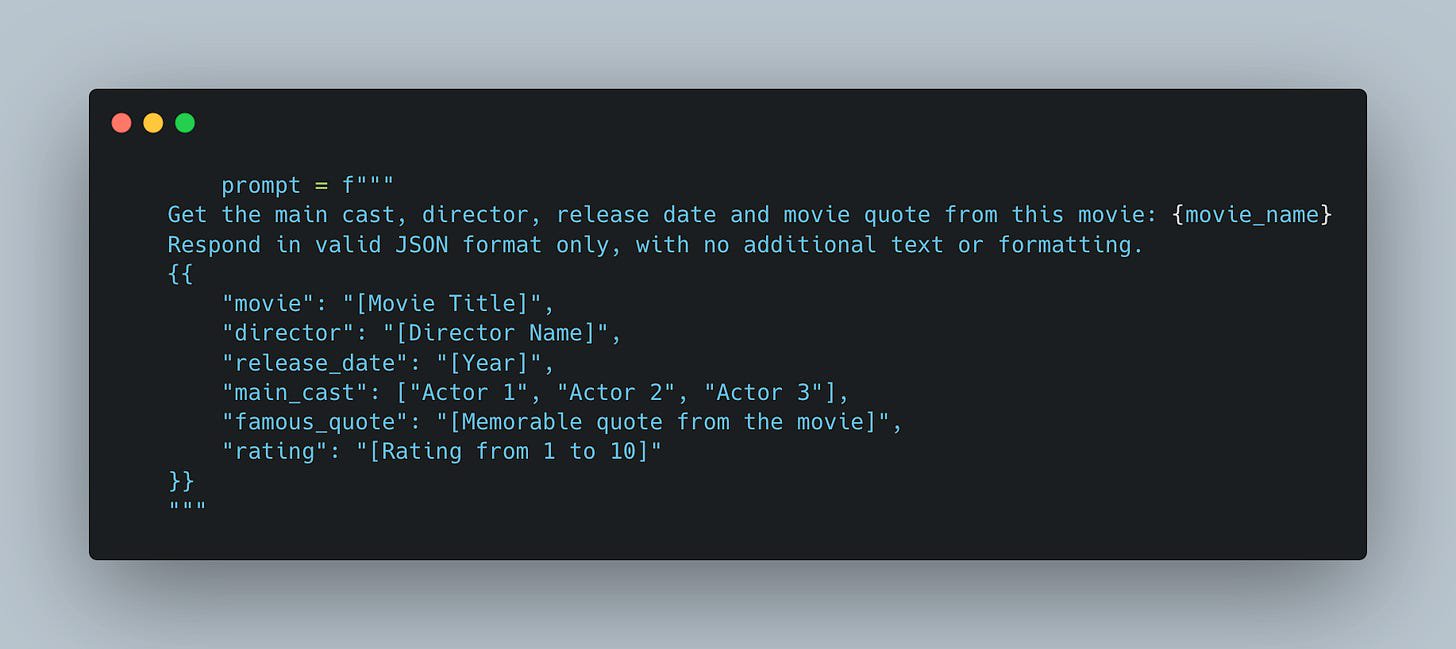

There is one last step left: adding the right instruction to the real LLM's prompt so that it returns an empty JSON object when no data is found:

Of course, I'll also have to modify the main function to call query_llm with the real LLM object, but that's out of scope for this example.

And that's it! Now you can run the application with the real LLM and see that it works as expected!

Conclusion

LangChain's fake LLM helpers are convenient, fast and powerful tools. They can help you write more robust and reliable code and, in the process, save you some money, a headache or two, and lots of time.

Check out the code here!