I’ve been deep into AI code generation for the past several months, and I believe I have something worthwhile to share. At one point AI capabilities genuinely scared me; I even told a good friend of mine, a software developer, to start practicing farming (it was a joke, fellas). This information may become outdated quickly, as AI evolves at the speed of light, but here we go anyway…

At first, AI code generation tools seemed like overhyped autocomplete on steroids. The generated code often looked impressive in demos but fell apart in real-world scenarios: inconsistent patterns, missing edge cases, and logic that worked for simple examples but failed under complexity. As of 2025, however, combining AI with Test-Driven Development fundamentally changes this equation. When tests define the requirements upfront, AI code generation transforms from unreliable magic into a systematic, verifiable process.

AI Code Generation Finally Makes Sense With TDD

Recent research reports that AI-assisted TDD improves code accuracy by 12-18% while reducing development time by up to 50%. More importantly, it addresses the core weakness of standalone AI code generation: the lack of clear, verifiable requirements that can guide and validate the output.

The Problem with Standalone AI Code Generation

Traditional AI code generation suffers from several fundamental issues. Without clear constraints, AI systems often generate over-engineered solutions that work for narrow cases but break when requirements change. The code may follow good practices superficially but miss critical business logic or fail to handle edge cases that only become apparent during testing.

Google has said that more than a quarter of its new code is now generated by AI and then reviewed by engineers, and the most successful adoptions follow structured patterns where AI works within well-defined boundaries. Unstructured code generation, such as asking AI to "build a user authentication system", produces inconsistent results compared with constraint-driven approaches.

The breakthrough insight from Mock et al. (2024) in "Test-Driven Development for Code Generation" reveals why TDD solves these problems: tests serve as executable specifications that precisely define what the code must accomplish, giving AI systems clear targets rather than vague requirements.

How TDD Transforms AI Code Generation

Test-Driven Development provides the missing piece that makes AI code generation reliable: comprehensive upfront specification. Instead of asking AI to interpret ambiguous requirements, TDD creates a detailed contract that code must satisfy. This shifts AI from creative interpretation to systematic implementation.

Test-Driven Generation (TDG) extends traditional Red-Green-Refactor cycles by using AI for both test creation from specifications and implementation generation. The key difference: every generated piece of code has immediate verification through pre-existing tests, eliminating the guesswork that plagues standalone AI generation.
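To make the generate-and-verify loop concrete, here is a minimal Python sketch. It assumes a hypothetical `generate` callable standing in for whatever AI tool you use; it receives the names of failing checks as feedback, and the loop stops as soon as every pre-existing check passes or an iteration cap is reached. The function names and the five-iteration cap are illustrative, not part of any TDG tool's API.

```python
from typing import Callable, List, Optional

# A check raises AssertionError (or any exception) when the candidate is wrong.
Check = Callable[[Callable], None]


def failing_checks(candidate: Callable, checks: List[Check]) -> List[str]:
    """Run every check against the candidate and return the names of those that fail."""
    failures = []
    for check in checks:
        try:
            check(candidate)
        except Exception:
            failures.append(check.__name__)
    return failures


def generate_until_green(
    generate: Callable[[List[str]], Callable],
    checks: List[Check],
    max_iterations: int = 5,
) -> Optional[Callable]:
    """Request code, feed failing check names back, stop when all checks pass."""
    feedback: List[str] = []
    for _ in range(max_iterations):
        candidate = generate(feedback)
        feedback = failing_checks(candidate, checks)
        if not feedback:
            return candidate  # Green: every pre-existing check passes
    return None  # Cap reached: hand the problem back to a human


# Checks written before any implementation exists (the executable specification).
def check_positive(f):
    assert f(3) == 3


def check_negative(f):
    assert f(-3) == 3


def check_zero(f):
    assert f(0) == 0


# Hypothetical stand-in for an AI call: the first attempt is buggy, the second is fixed.
_attempts = iter([lambda x: x, lambda x: x if x >= 0 else -x])


def fake_generate(feedback: List[str]) -> Callable:
    return next(_attempts)


result = generate_until_green(fake_generate, [check_positive, check_negative, check_zero])
```

The first candidate fails only `check_negative`; that name is fed back, and the second candidate passes everything, so the loop returns it.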

Research by 8th Light on "TDD: The Missing Protocol for Effective AI Collaboration" argues that AI works best within structured frameworks where tests act as "guard rails" that keep the system from generating overly complex or incorrect solutions while ensuring all requirements are met.

Building Specifications That AI Can Understand

The effectiveness of AI-assisted TDD depends heavily on specification quality. Vague requirements produce unreliable results, but structured specifications enable consistent, high-quality output. Effective specifications combine multiple information layers:

Hierarchical requirement organization separates concerns into system, component, and unit levels with clear interfaces. This structure helps AI understand scope boundaries and generate appropriate implementations at each level without over-engineering.

Formal constraints embedded in specifications eliminate ambiguity. Instead of "validate user input," specify exact requirements:

Email Validation Requirements:
- MUST comply with RFC 5322 format specification
- MUST reject common typos (missing @, multiple @@ symbols)
- MUST handle international domain names correctly
- MUST return specific error codes: INVALID_FORMAT, DOMAIN_NOT_FOUND
- MUST complete validation within 100ms for 99% of cases
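Here is how that specification might look once it is turned into executable checks. This is a sketch under several assumptions: `validate_email`, `EmailError`, and the injected `domain_exists` lookup are hypothetical names; the regular expression is a deliberately simplified subset of RFC 5322 (no quoted local parts, comments, or IP-literal domains); and international domains are converted with Python's built-in `idna` codec, which implements the older IDNA 2003 rules. `run_spec` is the part you would write first; the implementation above it is one way to make it pass.

```python
import re
import time
from enum import Enum
from typing import Callable, Optional


class EmailError(Enum):
    INVALID_FORMAT = "INVALID_FORMAT"
    DOMAIN_NOT_FOUND = "DOMAIN_NOT_FOUND"


# Simplified subset of RFC 5322 "dot-atom" addresses; not the full grammar.
_LOCAL = r"[A-Za-z0-9!#$%&'*+/=?^_`{|}~-]+(?:\.[A-Za-z0-9!#$%&'*+/=?^_`{|}~-]+)*"
_LABEL = r"[A-Za-z0-9](?:[A-Za-z0-9-]{0,61}[A-Za-z0-9])?"
_EMAIL_RE = re.compile(rf"{_LOCAL}@(?:{_LABEL}\.)+{_LABEL}")


def validate_email(address: str, domain_exists: Callable[[str], bool]) -> Optional[EmailError]:
    """Return None for a valid address, otherwise the matching error code."""
    if address.count("@") != 1:  # catches a missing @ and doubled @@
        return EmailError.INVALID_FORMAT
    local, domain = address.split("@")
    try:
        ascii_domain = domain.encode("idna").decode("ascii")  # münchen.de -> xn--mnchen-3ya.de
    except UnicodeError:
        return EmailError.INVALID_FORMAT
    if not _EMAIL_RE.fullmatch(f"{local}@{ascii_domain}"):
        return EmailError.INVALID_FORMAT
    if not domain_exists(ascii_domain):
        return EmailError.DOMAIN_NOT_FOUND
    return None


KNOWN_DOMAINS = {"example.com", "xn--mnchen-3ya.de"}


def fake_dns(domain: str) -> bool:
    """Hypothetical stand-in for a real DNS/MX lookup, so the checks run offline."""
    return domain in KNOWN_DOMAINS


def run_spec() -> None:
    assert validate_email("user@example.com", fake_dns) is None
    assert validate_email("user.example.com", fake_dns) is EmailError.INVALID_FORMAT   # missing @
    assert validate_email("user@@example.com", fake_dns) is EmailError.INVALID_FORMAT  # doubled @@
    assert validate_email("user@münchen.de", fake_dns) is None                          # IDN domain
    assert validate_email("user@nowhere.invalid", fake_dns) is EmailError.DOMAIN_NOT_FOUND
    # Latency requirement: 99% of validations under 100 ms (measured locally).
    timings = []
    for _ in range(200):
        start = time.perf_counter()
        validate_email("user@example.com", fake_dns)
        timings.append(time.perf_counter() - start)
    timings.sort()
    assert timings[int(len(timings) * 0.99) - 1] < 0.1


run_spec()
```

Injecting the domain lookup keeps the checks deterministic; a production version would swap `fake_dns` for a real resolver and test the DOMAIN_NOT_FOUND path separately.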

Multi-modal specifications tend to work best, combining OpenAPI schemas for interfaces, state diagrams for behavior, and natural language for business rules. This comprehensive context enables AI to generate code that satisfies both technical and business requirements.

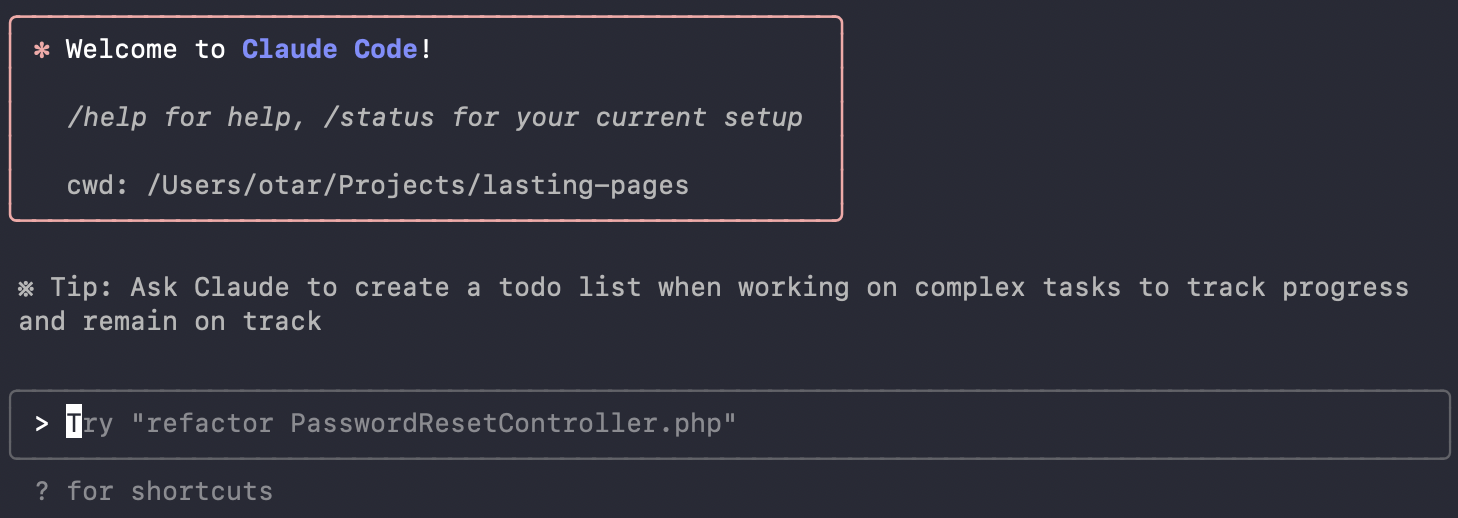

Claude Code: Where TDD Integration Excels

After testing various AI coding tools, I found that Claude Code's approach to TDD integration stands out. Its 200K-token context window (as of July 2025) lets it take in complex test suites and system architecture at the same time, while its terminal-based operation fits naturally into TDD workflows.

Running /init analyses an existing codebase and generates a CLAUDE.md configuration file that captures established patterns. This helps generated code follow project conventions from the start, addressing one of the major consistency issues with AI generation.

The TDD cycle with Claude Code follows this pattern:

- Define comprehensive specifications with formal constraints

- Generate complete test suites that verify all requirements

- Run tests to confirm they fail appropriately (Red phase)

- Generate implementation code that satisfies test requirements (Green phase)

- Refactor generated code while maintaining test coverage
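The Red and Green steps of this cycle can be enforced mechanically rather than by eye. The sketch below uses only Python's standard `unittest` module to run the same specification first against a stub (every test must fail) and then against an implementation (every test must pass). The `slugify` example and the helper names are hypothetical; in practice the stub is whatever exists before the AI writes the implementation.

```python
import io
import unittest


def slugify_stub(text: str) -> str:
    raise NotImplementedError  # Red phase: nothing implemented yet


def slugify_impl(text: str) -> str:
    # Green phase: the minimal code that satisfies the specification below
    cleaned = "".join(c if c.isalnum() or c.isspace() else " " for c in text.lower())
    return "-".join(cleaned.split())


class SlugifySpec(unittest.TestCase):
    impl = staticmethod(slugify_stub)  # swapped between phases by run_phase()

    def test_lowercases_and_joins_words(self):
        self.assertEqual(self.impl("Hello World"), "hello-world")

    def test_strips_punctuation(self):
        self.assertEqual(self.impl("AI, TDD & You!"), "ai-tdd-you")

    def test_empty_input(self):
        self.assertEqual(self.impl(""), "")


def run_phase(impl) -> unittest.TestResult:
    """Run the whole specification against one implementation, silently."""
    SlugifySpec.impl = staticmethod(impl)
    suite = unittest.TestLoader().loadTestsFromTestCase(SlugifySpec)
    return unittest.TextTestRunner(stream=io.StringIO(), verbosity=0).run(suite)


red = run_phase(slugify_stub)
assert red.testsRun == 3 and not red.wasSuccessful()  # Red: every test must fail first
assert len(red.failures) + len(red.errors) == red.testsRun

green = run_phase(slugify_impl)
assert green.wasSuccessful()  # Green: the implementation satisfies the spec
```

A test that passes against the stub is a warning sign: it is not actually constraining the implementation, so it should be fixed before any code is generated.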

Custom command creation enables domain-specific patterns that improve generation quality. Financial applications can define compliance-focused test generation, while web applications might include accessibility testing patterns. This customization addresses another weakness of generic AI generation: lack of domain awareness.

Edge Cases: Where AI Surpasses Human Testing

One unexpected advantage of AI-assisted TDD is comprehensive edge case coverage. Research by Arbon (2024) on "Edge Case Testing with AI" shows that AI systems identify boundary conditions and exceptional scenarios that human developers commonly overlook.

Symbolic execution frameworks combined with AI can analyse specifications and explore program paths systematically. For input validation components, AI can generate tests for Unicode encoding edge cases, floating-point precision errors, buffer overflow conditions, and combinations of error states that only occur when multiple systems fail simultaneously.
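As an illustration of those input-validation edge cases, here are two small hypothetical helpers together with the kind of checks an assistant might propose: Unicode normalization (a precomposed "é" versus "e" plus a combining accent), exact decimal arithmetic instead of binary floating point, rejection of NaN, infinity, and negative values, and length boundaries. The 32-character limit is an arbitrary assumption; since Python strings are memory-safe, the length checks stand in for the buffer-overflow category here.

```python
import unicodedata
from decimal import Decimal, InvalidOperation

MAX_USERNAME = 32  # hypothetical limit, for illustration only


def normalize_username(raw: str) -> str:
    """NFC-normalize, casefold, and trim so visually identical names compare equal."""
    name = unicodedata.normalize("NFC", raw).casefold().strip()
    if not name or len(name) > MAX_USERNAME:
        raise ValueError(f"username must be 1 to {MAX_USERNAME} characters")
    return name


def parse_amount(text: str) -> Decimal:
    """Parse a non-negative money amount exactly, without binary floating point."""
    try:
        value = Decimal(text.strip())
    except InvalidOperation:
        raise ValueError(f"not a number: {text!r}") from None
    if not value.is_finite() or value < 0:  # rejects NaN, Infinity, negatives
        raise ValueError(f"amount must be finite and non-negative: {text!r}")
    return value


def rejects(fn, arg) -> bool:
    """True if fn(arg) raises ValueError."""
    try:
        fn(arg)
    except ValueError:
        return True
    return False


# Edge-case checks
assert normalize_username("Jose\u0301") == normalize_username("Jos\u00e9")  # NFD vs NFC
assert normalize_username("a" * MAX_USERNAME) == "a" * MAX_USERNAME          # exactly at the limit
assert rejects(normalize_username, "a" * (MAX_USERNAME + 1))                # one past the limit
assert rejects(normalize_username, " \t ")                                   # whitespace only
assert 0.1 + 0.2 != 0.3                                                      # the float trap
assert parse_amount("0.1") + parse_amount("0.2") == parse_amount("0.3")      # exact decimals
assert all(rejects(parse_amount, s) for s in ["NaN", "Infinity", "-1", "abc", ""])
```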

Property-based testing enables AI to derive mathematical invariants from specifications and generate test cases that systematically verify these properties. The TGen framework demonstrates that providing tests alongside specifications improves AI code generation success rates from about 80% to about 87%, with even greater improvements for complex scenarios.
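A property-based test states an invariant once and checks it against many generated inputs. The sketch below uses only the standard library's seeded `random` module so it runs anywhere; libraries such as Hypothesis do the same job with smarter input generation and automatic shrinking of failing cases. The run-length encoder is a hypothetical example chosen because its invariants are easy to state.

```python
import random
from itertools import groupby
from typing import List, Tuple


def rle_encode(s: str) -> List[Tuple[str, int]]:
    """Run-length encode: 'aaab' -> [('a', 3), ('b', 1)]."""
    return [(ch, len(list(group))) for ch, group in groupby(s)]


def rle_decode(pairs: List[Tuple[str, int]]) -> str:
    return "".join(ch * count for ch, count in pairs)


def check_properties(trials: int = 500, seed: int = 0) -> None:
    rng = random.Random(seed)  # seeded, so any failure is reproducible
    for _ in range(trials):
        # Small alphabet (including a non-ASCII letter) to force repeated runs
        s = "".join(rng.choice("ab\u00e9") for _ in range(rng.randint(0, 20)))
        pairs = rle_encode(s)
        assert rle_decode(pairs) == s, s                                # round trip
        assert sum(n for _, n in pairs) == len(s), s                    # lengths preserved
        assert all(a[0] != b[0] for a, b in zip(pairs, pairs[1:])), s   # runs are maximal
        assert all(n > 0 for _, n in pairs), s                          # no empty runs


check_properties()
```

Each property is derived from what the encoder promises rather than from specific examples, which is why this style surfaces edge cases (empty strings, single characters, long runs) without anyone listing them by hand.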

Quality Assurance That Actually Works

Traditional AI code generation lacks reliable quality metrics, but TDD provides systematic validation approaches. Mutation testing verifies that generated tests can actually catch defects: if tests cannot detect intentionally introduced bugs, they are unlikely to catch real ones.
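Here is a minimal, hand-rolled illustration of the idea. Each mutant injects one small bug into a hypothetical `is_adult` function; a suite that kills a mutant (fails against it) has shown it can detect that kind of defect. Real projects would normally use a dedicated tool such as mutmut for Python or PIT for Java to generate mutants automatically.

```python
from typing import Callable, Dict


def is_adult(age: int) -> bool:
    return age >= 18


# Hand-written mutants, each injecting one small deliberate bug.
MUTANTS: Dict[str, Callable[[int], bool]] = {
    "'>=' changed to '>'": lambda age: age > 18,
    "18 changed to 17": lambda age: age >= 17,
    "result negated": lambda age: not (age >= 18),
}


def weak_suite(f: Callable[[int], bool]) -> bool:
    """Passes if f behaves correctly on two values far from the boundary."""
    return f(30) is True and f(5) is False


def strong_suite(f: Callable[[int], bool]) -> bool:
    """Adds the boundary values 17 and 18."""
    return weak_suite(f) and f(18) is True and f(17) is False


def mutation_score(suite, original, mutants) -> float:
    """Fraction of mutants the suite kills (i.e. fails against)."""
    if not suite(original):
        raise ValueError("the suite must pass on the original code first")
    killed = sum(1 for mutant in mutants.values() if not suite(mutant))
    return killed / len(mutants)


print(mutation_score(weak_suite, is_adult, MUTANTS))    # only the negation is caught
print(mutation_score(strong_suite, is_adult, MUTANTS))  # all three are caught
```

Both suites pass against the original code, so coverage alone would not distinguish them; the surviving mutants are what reveal that the weak suite never tests the boundary.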

Research on "Property-Based Mutation Testing" shows how AI systems generate targeted mutations that focus on areas most likely to reveal test weaknesses. This approach validates both the generated tests and the implementation code, creating a feedback loop that improves quality systematically.

Test effectiveness prediction uses machine learning models trained on historical defect patterns to assess the likelihood that test cases will find real bugs. This predictive approach helps prioritize testing efforts and identify areas where additional coverage provides the most value.

Continuous evolution enables AI systems to learn from production failures. When defects escape initial testing, AI analyzes the failure patterns and automatically generates preventive tests, creating a system that improves over time rather than degrading.

Economic Reality Check

The economics of AI-assisted TDD justify the additional upfront investment in test creation. One analysis reports typical costs of $0.31 per five-iteration development cycle using GPT-4, translating to ~$0.6 saved per development hour compared to traditional approaches.
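The quoted cost breaks down easily. Using exact decimals to avoid rounding noise, $0.31 spread over five iterations is about six cents per iteration. The 20 cycles per day and 21 working days used below are assumptions for illustration, not figures from the analysis.

```python
from decimal import Decimal

CYCLE_COST_USD = Decimal("0.31")  # quoted cost of one five-iteration cycle with GPT-4
ITERATIONS_PER_CYCLE = 5

cost_per_iteration = CYCLE_COST_USD / ITERATIONS_PER_CYCLE  # Decimal('0.062')


def monthly_cost(cycles_per_day: int, working_days: int = 21) -> Decimal:
    """Model spend for a hypothetical usage pattern (assumed, not measured)."""
    return CYCLE_COST_USD * cycles_per_day * working_days


print(cost_per_iteration, monthly_cost(20))
```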

More significant savings come from:

- Reduced debugging time in production environments

- Faster feature development cycles with higher confidence

- More comprehensive test coverage without proportional effort increases

- Consistent code quality across team members with varying skill levels

Cost optimisation strategies include using less expensive models for boilerplate generation while reserving premium models for complex business logic, implementing token limits to prevent runaway costs, and maintaining conversation context to avoid regenerating similar code patterns.
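These three strategies can be combined in a small wrapper. In this sketch the model names, task categories, and the `call_model` function are all hypothetical placeholders rather than any provider's API: routine task kinds go to a cheaper model, every new request is charged against a hard token budget, and repeated prompts are served from a cache instead of being regenerated.

```python
from typing import Callable, Dict, Tuple

CHEAP_MODEL = "small-model"    # hypothetical identifier
PREMIUM_MODEL = "large-model"  # hypothetical identifier
BOILERPLATE_KINDS = {"dto", "test_fixture", "crud_endpoint"}  # assumed task categories


def pick_model(task_kind: str) -> str:
    """Route boilerplate to the cheap model and everything else to the premium one."""
    return CHEAP_MODEL if task_kind in BOILERPLATE_KINDS else PREMIUM_MODEL


class TokenBudget:
    """Hard cap on tokens spent, so a runaway loop fails fast instead of running up costs."""

    def __init__(self, max_tokens: int) -> None:
        self.max_tokens = max_tokens
        self.used = 0

    def charge(self, tokens: int) -> None:
        if self.used + tokens > self.max_tokens:
            raise RuntimeError(f"token budget exceeded: {self.used + tokens} > {self.max_tokens}")
        self.used += tokens


def make_cached_generator(call_model: Callable[[str, str], str]):
    """Wrap a model call so identical (model, prompt) pairs are only paid for once."""
    cache: Dict[Tuple[str, str], str] = {}

    def generate(model: str, prompt: str, budget: TokenBudget, est_tokens: int) -> str:
        key = (model, prompt)
        if key not in cache:
            budget.charge(est_tokens)  # charge before calling, using an estimate
            cache[key] = call_model(model, prompt)
        return cache[key]

    return generate
```

Charging an estimate before the call is a deliberately conservative choice: the request that would break the budget is refused instead of being discovered on the bill afterwards.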

Integration with Real Development Workflows

Success requires integration with existing development practices rather than wholesale replacement. Smart test selection using machine learning predicts which tests are most likely to fail given specific code changes, enabling targeted execution that maintains coverage while reducing CI/CD time.
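A trained model is beyond a short example, but the underlying idea can be sketched with a simple frequency heuristic standing in for it: select only the tests that cover the changed files, and run first those that have failed most often when those files changed before. The coverage map and failure history would come from your CI system; the data below is made up for illustration.

```python
from collections import Counter
from typing import Dict, List, Set, Tuple


def rank_tests(
    changed: Set[str],
    coverage: Dict[str, Set[str]],
    history: List[Tuple[Set[str], Set[str]]],
) -> List[str]:
    """Return tests touching `changed`, most historically failure-prone first.

    coverage: test name -> source files it exercises
    history:  (files changed in a past commit, tests that failed for it)
    """
    change_counts: Counter = Counter()
    fail_counts: Counter = Counter()
    for files, failed in history:
        for f in files:
            change_counts[f] += 1
            for test in failed:
                if f in coverage.get(test, set()):
                    fail_counts[(test, f)] += 1

    def score(test: str) -> float:
        # Highest observed failure rate over the changed files this test covers.
        rates = [
            fail_counts[(test, f)] / change_counts[f] if change_counts[f] else 0.0
            for f in coverage[test] & changed
        ]
        return max(rates)

    candidates = [t for t, files in coverage.items() if files & changed]
    return sorted(candidates, key=lambda t: (-score(t), t))


COVERAGE = {
    "test_login": {"auth.py"},
    "test_signup": {"auth.py", "db.py"},
    "test_report": {"report.py"},
}
HISTORY = [
    ({"auth.py"}, {"test_login"}),
    ({"auth.py"}, set()),
    ({"db.py"}, {"test_signup"}),
]

print(rank_tests({"auth.py"}, COVERAGE, HISTORY))
```

For a change to `auth.py`, `test_report` is skipped entirely, and `test_login` runs first because it failed in one of the two earlier commits that touched that file.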

Progressive complexity introduction starts with simple test cases and gradually adds complexity as basic functionality proves stable. This approach prevents overwhelming developers with simultaneous failures while ensuring comprehensive validation of all requirements.
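One way to picture this is to group checks into tiers and stop at the first tier that fails, so basic behavior gets fixed before harder cases are even reported. The `word_count`-style implementations, the tier names, and the helper are hypothetical.

```python
from typing import Callable, List, Optional, Tuple


def basic_two_words(f):
    assert f("one two") == 2


def empty_string(f):
    assert f("") == 0


def mixed_whitespace(f):
    assert f("  a\tb\n") == 2


def non_ascii_words(f):
    assert f("naïve café") == 2


TIERS = [
    ("basic", [basic_two_words, empty_string]),
    ("whitespace", [mixed_whitespace]),
    ("unicode", [non_ascii_words]),
]


def run_progressively(impl: Callable[[str], int], tiers) -> Optional[Tuple[str, List[str]]]:
    """Return (tier, failed check names) for the first failing tier, or None if all pass."""
    for tier_name, checks in tiers:
        failed = []
        for check in checks:
            try:
                check(impl)
            except AssertionError:
                failed.append(check.__name__)
        if failed:
            return tier_name, failed  # stop here; later tiers are not reported yet
    return None


def naive(text: str) -> int:
    return len(text.split(" ")) if text else 0  # first attempt: splits on spaces only


def fixed(text: str) -> int:
    return len(text.split())  # splits on any run of whitespace


print(run_progressively(naive, TIERS))
print(run_progressively(fixed, TIERS))
```

The naive version clears the basic tier but stops at the whitespace tier, which keeps the failure report short and focused.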

Ready Set Cloud's implementation shows this approach reducing development time by approximately 50% for typical business applications, and Carvana reports that specifications can be "converted to production code in minutes" while maintaining quality standards.

Starting Implementation

Organisations should begin with well-defined components that have clear, measurable requirements. The implementation process involves:

- Create detailed specifications with formal constraints and unambiguous acceptance criteria

- Generate comprehensive test suites using AI tools that understand the full specification context

- Validate test quality through mutation testing and coverage analysis before implementation

- Generate implementation code iteratively using tests as verification checkpoints

- Integrate systematically with existing development workflows and quality processes

Success requires treating AI as a powerful implementation tool rather than a replacement for human architectural judgment. Developers maintain control over system design and business logic while delegating mechanical implementation tasks to AI systems.

Why This Changes Everything

AI-assisted TDD addresses the fundamental reliability problems that made standalone AI code generation unsuitable for production systems. By providing clear specifications through tests, the approach transforms AI from an unpredictable creative tool into a systematic implementation engine. AI is most powerful when it has a clear target result for comparison.

The research indicates that specification-driven development with AI assistance will become standard practice for many software projects. Organizations investing in these approaches now position themselves to leverage increasingly sophisticated AI capabilities while maintaining the reliability and maintainability standards essential for production systems.

The skepticism about AI code generation was justified until TDD provided the missing framework that makes it work reliably. With proper constraints and verification, AI generation becomes not just viable but transformative for software development efficiency and quality.

Otar