Let’s say you know that your metrics changed at a certain point in time. How can you use that knowledge to learn what exactly changed?

Sometimes it's possible to reduce a practical problem down to a well-studied one.

In this case it’s possible to turn “What Changed?” into a Classification Problem.

- Label data

  - Everything before the suspected change → control = 0

  - Everything after the suspected change → treatment = 1

- Train a classifier to predict 0 vs 1 using only impression-level features (geo, publisher, app, creative, campaign, etc.). Never use time, or anything derived from it, as a feature: it leaks the target.

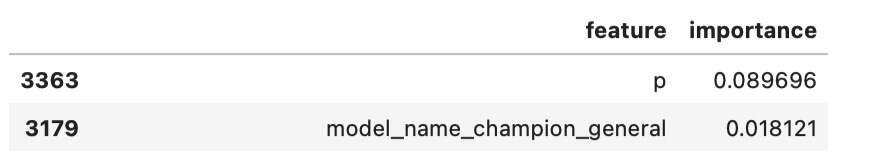

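- Look at feature importances → the top-ranked features are the ones whose distribution shifted most between the two periods, so they are the strongest candidates for what changed.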

That’s it. The classifier is forced to find the patterns that best separate “old world” from “new world”. If it can’t separate them at all (ROC AUC close to 0.5), then nothing in these features changed.
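To see the idea end to end, here is a minimal sketch on synthetic data. Everything in it is made up for illustration: the column names `geo` and `publisher`, the category values, and the probabilities. Only `publisher` shifts between the two periods, so it should come out on top.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import RandomForestClassifier

rng = np.random.default_rng(0)
n = 4000

# Hypothetical impression-level data: first half is "before" (0), second half "after" (1).
label = np.repeat([0, 1], n // 2)

# 'geo' has the same distribution in both periods.
geo = rng.choice(["US", "DE", "BR"], size=n)

# 'publisher' shifts: pub_c is rare before the change and common after it.
publisher = np.where(
    label == 0,
    rng.choice(["pub_a", "pub_b", "pub_c"], size=n, p=[0.45, 0.45, 0.10]),
    rng.choice(["pub_a", "pub_b", "pub_c"], size=n, p=[0.20, 0.20, 0.60]),
)

# One-hot encode the categorical features; note that no time column is included.
X = pd.get_dummies(pd.DataFrame({"geo": geo, "publisher": publisher}))

clf = RandomForestClassifier(n_estimators=50, random_state=0).fit(X, label)

# Rank features by how much they help separate "before" from "after".
importances = pd.Series(clf.feature_importances_, index=X.columns).sort_values(ascending=False)
print(importances)
```

The `publisher_*` columns should dominate the ranking, while the `geo_*` columns stay near zero because they carry no information about the period.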

Why this works so well

- Gradient-boosted trees (LightGBM, XGBoost, CatBoost) are a good fit here: they handle mixed data types and missing values, and they give you feature importances out of the box.

- Because there is no time in the dataset, the model can’t cheat with ‘it’s later → label 1’.

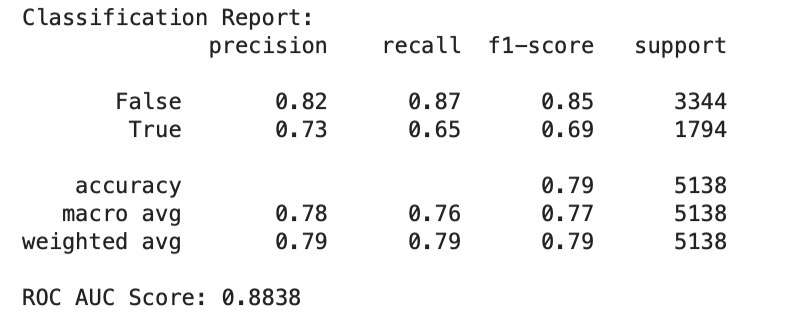

```python
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler, OneHotEncoder
from sklearn.compose import ColumnTransformer
from sklearn.pipeline import Pipeline
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import classification_report, roc_auc_score

# Label rows: 0 = before the suspected change, 1 = after it
df = pd.read_parquet('data.pq').assign(
    y=lambda x: (x.t > '2025-09-09').astype(int)
)

numerical_features = ['p']  # specify your numeric columns
# Every other column except the timestamp 't' and the label 'y' is treated as categorical
categorical_features = df.drop(columns=['t', 'y'] + numerical_features).columns.tolist()
target = 'y'  # the before/after label we are trying to predict

# The timestamp 't' is deliberately left out of the features
X = df[categorical_features + numerical_features]
y = df[target]

# Split data into training and testing sets
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

# Create preprocessing pipeline
preprocessor = ColumnTransformer(
    transformers=[
        ('cat', OneHotEncoder(handle_unknown='ignore', sparse_output=False), categorical_features),
        ('num', StandardScaler(), numerical_features)
    ])

# Create pipeline with preprocessor and RandomForestClassifier
model = Pipeline(steps=[
    ('preprocessor', preprocessor),
    ('classifier', RandomForestClassifier(n_estimators=100, random_state=42, n_jobs=-1))
])

# Fit the model
model.fit(X_train, y_train)
y_pred = model.predict(X_test)
y_pred_proba = model.predict_proba(X_test)[:, 1]

# Evaluate the model: an AUC well above 0.5 means the two periods are separable
print("Classification Report:")
print(classification_report(y_test, y_pred))
print(f"ROC AUC Score: {roc_auc_score(y_test, y_pred_proba):.4f}")

# Top 10 features; names follow the ColumnTransformer order (one-hot columns, then numeric)
importances = pd.DataFrame({
    'feature': (model.named_steps['preprocessor']
                .named_transformers_['cat']
                .get_feature_names_out(categorical_features)
                .tolist() + numerical_features),
    'importance': model.named_steps['classifier'].feature_importances_
}).sort_values(by='importance', ascending=False).head(10)
print(importances)
```
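Once a feature shows up at the top, a natural follow-up is to check which of its values moved. One possible way, sketched here on a tiny hypothetical table (the `publisher` column and its values are made up), is to compare the share of each value before and after the change:

```python
import pandas as pd

# Hypothetical labeled data: y = 0 before the change, y = 1 after it.
df = pd.DataFrame({
    "publisher": ["pub_a", "pub_a", "pub_b", "pub_c", "pub_a", "pub_c", "pub_c", "pub_c"],
    "y":         [0,       0,       0,       0,       1,       1,       1,       1],
})

# Share of each publisher within each period (each column sums to 1).
shares = pd.crosstab(df["publisher"], df["y"], normalize="columns")
shares.columns = ["before", "after"]

# Positive shift = the value became more common after the change.
shares["shift"] = shares["after"] - shares["before"]
shares = shares.sort_values("shift", key=abs, ascending=False)
print(shares)
```

The rows with the largest absolute shift are the specific values worth investigating first.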